New Study: The 130 Day Indexing Rule

At Indexing Insight we've analysed 1.4 million pages to test whether the 130-day indexing rule is true.


If a page hasn’t been crawled in the last 130 days, it gets deindexed.

This is the idea that Alexis Rylko put forward in his article: Google and the 130-Day Rule. Alexis showed that by combining the Days Since Last Crawl metric in Screaming Frog with the URL Inspection API, you can quickly identify pages at risk of being deindexed.

Pages are at risk of being deindexed if they have not been crawled in 130 days.

At Indexing Insight we track Days Since Last Crawl for every URL we monitor, which covers over 1 million pages. So we decided to run our own study to see if there is any truth to the 130-day indexing rule.

Side note: The 130-day indexing rule isn’t a new idea. A similar rule was identified by Jolle Lahr-Eigen and Behrend v. Hülsen, who found in a customer project in January 2024 that Googlebot had a 129-day cut-off in its crawling behavior.

💽 Methodology

The indexing data pulled in this study is from Indexing Insight. Here are a few more things to keep in mind when looking at the results:

👥 Small study: The study is based on 18 websites that use Indexing Insight, covering a range of sizes, industry types and brand authority.

⛰️ 1.4 million pages monitored: The study covers 1.4 million pages in total, aggregated into categories and analysed to identify trends.

🤑 Important pages: The websites using our tool are not always monitoring ALL their pages, but they monitor the most important traffic and revenue-driving pages.

📍 Submitted via XML sitemaps: The important pages are submitted to our tool via XML sitemaps and monitored daily.

🔎 URL Inspection API: The Days Since Last Crawl metric is calculated from the Last Crawl Time for each page, which is pulled using the URL Inspection API.

🗓️ Data pulled on a single date: The indexing states for all pages were pulled on 17/04/2025.

Only pages with last crawl time included: This study includes only pages, indexed or not indexed, that have a last crawl time from the URL Inspection API.

Quality indexing states only: The data has been filtered to the following quality-related indexing states: ‘Submitted and indexed’, ‘Crawled - currently not indexed’, ‘Discovered - currently not indexed’ and ‘URL is unknown to Google’. We’ve filtered out any technical or duplication indexing errors.
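To make the last two steps concrete, here is a minimal Python sketch of how Days Since Last Crawl could be derived and the state filter applied. It is not Indexing Insight's actual pipeline. It assumes you already hold the `inspectionResult` object returned by the URL Inspection API, whose `indexStatusResult` carries the `coverageState` and `lastCrawlTime` fields, and that timestamps have second precision. The function names and the output shape are hypothetical.

```python
from datetime import datetime, timezone

# The four coverage states kept in the study (from the methodology above).
QUALITY_STATES = {
    "Submitted and indexed",
    "Crawled - currently not indexed",
    "Discovered - currently not indexed",
    "URL is unknown to Google",
}


def days_since_last_crawl(last_crawl_time: str, as_of: datetime) -> int:
    """Whole days between an RFC 3339 crawl timestamp and a timezone-aware reporting date."""
    # Older Python versions do not accept a trailing "Z", so normalise it first.
    crawled = datetime.fromisoformat(last_crawl_time.replace("Z", "+00:00"))
    return (as_of - crawled).days


def summarise(inspection_result: dict, as_of: datetime):
    """Return a small record for one page, or None if the page is filtered out."""
    status = inspection_result.get("indexStatusResult", {})
    state = status.get("coverageState")
    last_crawl = status.get("lastCrawlTime")
    # Drop technical/duplication states and pages without a last crawl time.
    if state not in QUALITY_STATES or not last_crawl:
        return None
    return {
        "coverage_state": state,
        "days_since_last_crawl": days_since_last_crawl(last_crawl, as_of),
    }


# Illustrative input only, not data from the study.
example = {
    "indexStatusResult": {
        "coverageState": "Crawled - currently not indexed",
        "lastCrawlTime": "2024-12-01T00:00:00Z",
    }
}
print(summarise(example, datetime(2025, 4, 17, tzinfo=timezone.utc)))
```

Measured against the 17/04/2025 pull date, this example page comes out at 137 days since last crawl, so it would sit on the wrong side of the 130-day line.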

🕵️ Findings

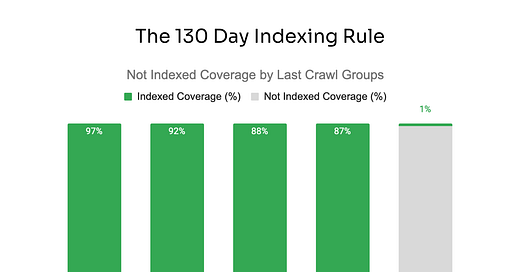

The 130-day indexing rule is true. BUT it’s more of an indicator than a hard rule.

Our data from 1.4 million pages across multiple websites shows that if a page has not been crawled in the last 130 days then there is a 99% chance the page is Not Indexed.

However, there are also Not Indexed pages that were crawled within the last 130 days.

This means that the 130-day rule is not a hard rule but more of an indicator that your pages might be deindexed by Google.

The data does show that the longer it takes for Googlebot to crawl a page, the greater the chance that the page will be Not Indexed. But after 130 days, the share of Not Indexed pages jumps from around 10% to 99%.
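If you want to run the same comparison on your own data, a rough Python sketch could look like the one below. It assumes each page is a dict with `days_since_last_crawl` and `coverage_state` keys (hypothetical names), treats anything other than ‘Submitted and indexed’ as Not Indexed, and counts day 130 itself as still inside the window. The sample pages are made up for illustration.

```python
def not_indexed_share(pages, threshold_days=130):
    """Return (share within threshold, share beyond threshold) of Not Indexed pages."""
    within = [p for p in pages if p["days_since_last_crawl"] <= threshold_days]
    beyond = [p for p in pages if p["days_since_last_crawl"] > threshold_days]

    def share(group):
        # None signals "no pages in this group" rather than a misleading 0%.
        if not group:
            return None
        not_indexed = sum(
            1 for p in group if p["coverage_state"] != "Submitted and indexed"
        )
        return not_indexed / len(group)

    return share(within), share(beyond)


# Illustrative pages only, not data from the study.
sample = [
    {"days_since_last_crawl": 5, "coverage_state": "Submitted and indexed"},
    {"days_since_last_crawl": 40, "coverage_state": "Submitted and indexed"},
    {"days_since_last_crawl": 90, "coverage_state": "Crawled - currently not indexed"},
    {"days_since_last_crawl": 130, "coverage_state": "Submitted and indexed"},
    {"days_since_last_crawl": 140, "coverage_state": "Crawled - currently not indexed"},
    {"days_since_last_crawl": 200, "coverage_state": "Crawled - currently not indexed"},
]
within_share, beyond_share = not_indexed_share(sample)
print(within_share, beyond_share)
```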

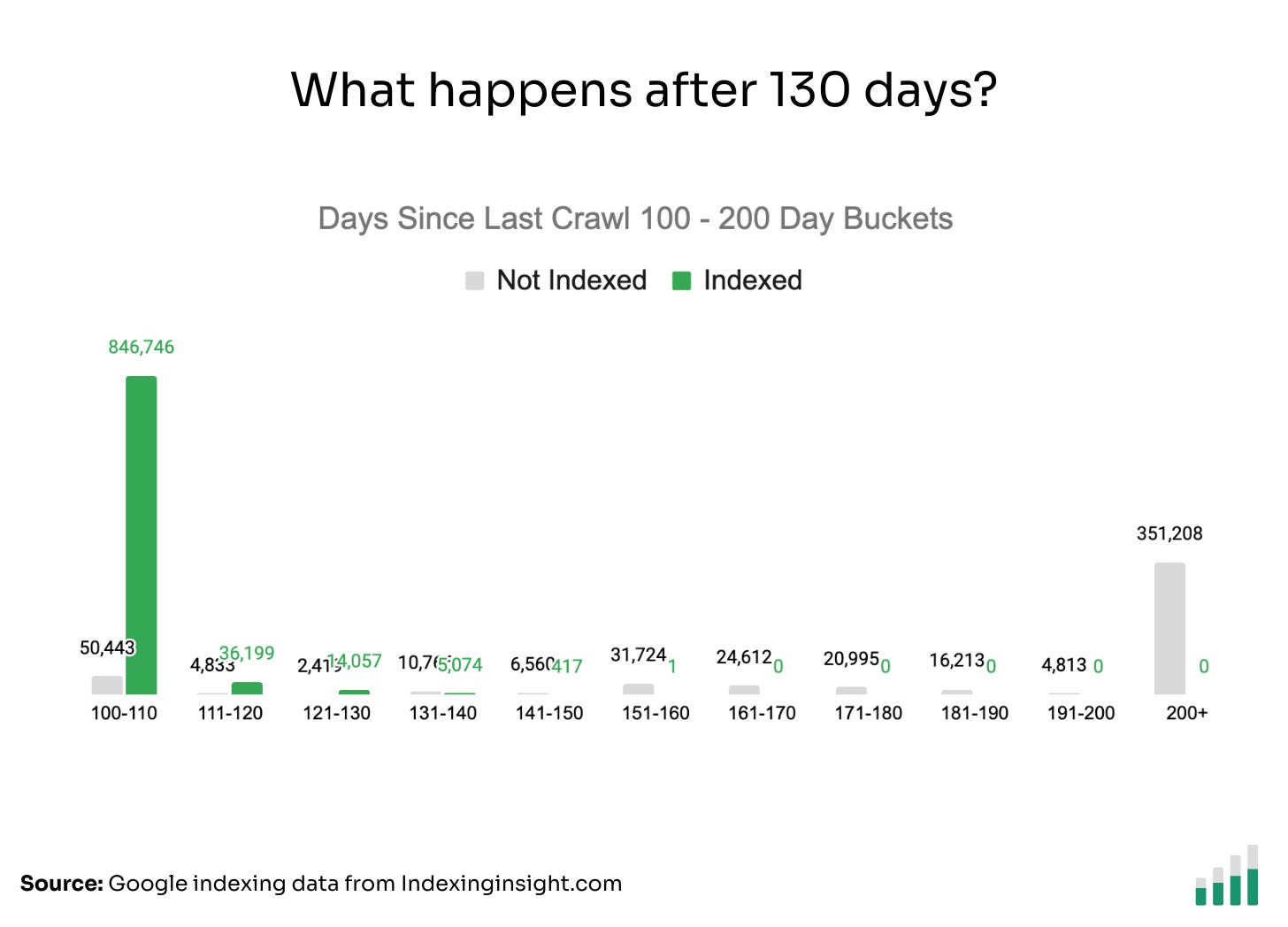

Below is the raw data to understand the scale of the pages in each category.

🤷‍♂️ What happens after 130 days?

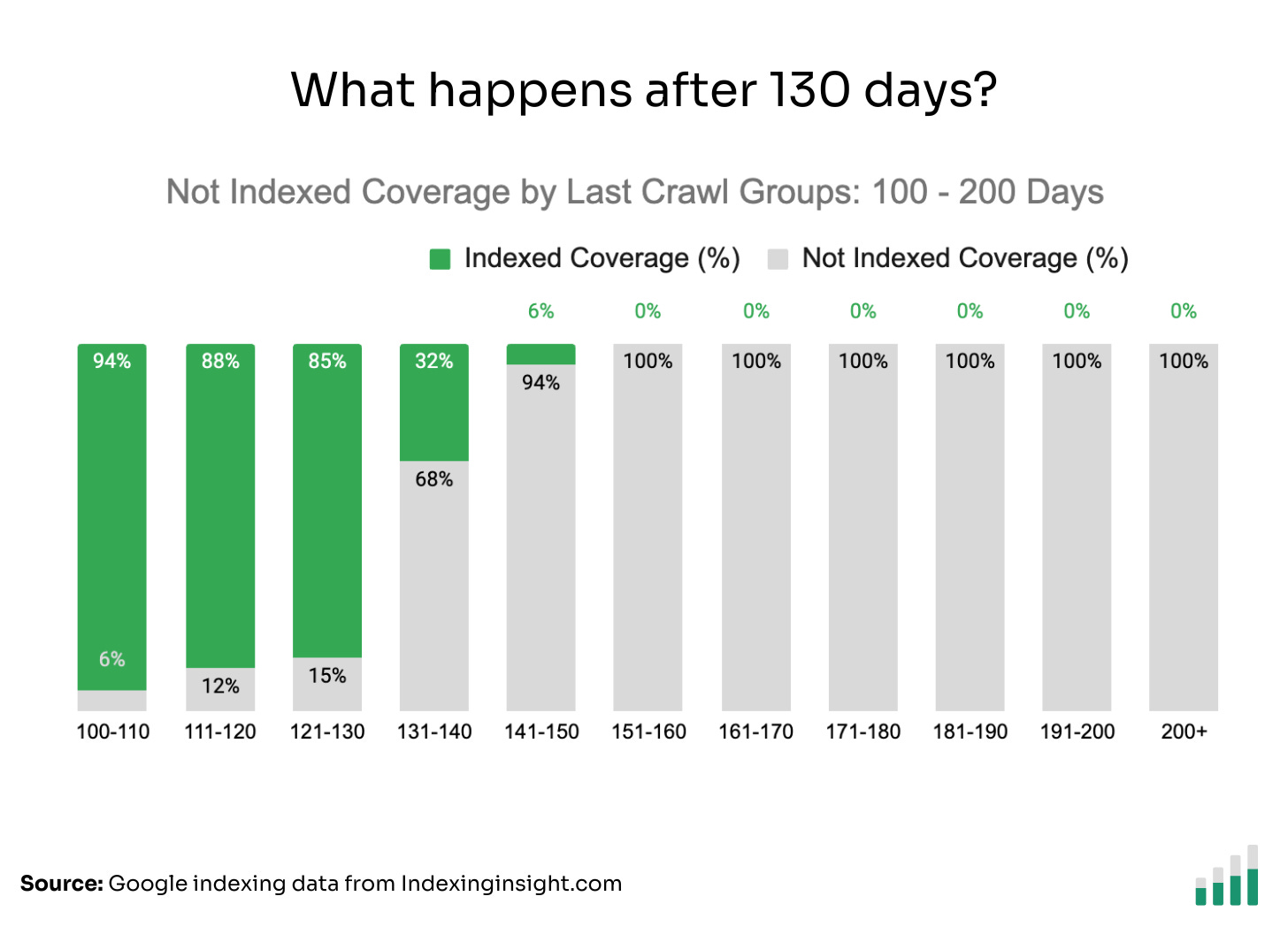

We broke down the data into last crawl buckets between 100 and 200 days.

The data shows that for pages last crawled 100 to 130 days ago, index coverage sits between 85% and 94%.

But, after 131 days, the Not Indexed coverage shoots up.

Not Indexed coverage goes from 68% to 100% between 131 and 151 days. Some pages are still indexed after 131 days, but index coverage drops sharply between 131 and 150 days.

After 151 days, there are 0 indexed pages.
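A breakdown like this can be sketched in a few lines of Python. The bucket width used in the study is not stated here, so the 10-day width below is an assumption, as are the function name and the page dict keys. The sample pages are made up for illustration.

```python
from collections import defaultdict


def indexed_share_by_bucket(pages, start=100, stop=200, width=10):
    """Share of indexed pages per days-since-last-crawl bucket in [start, stop)."""
    counts = defaultdict(lambda: [0, 0])  # bucket start -> [indexed, total]
    for p in pages:
        days = p["days_since_last_crawl"]
        if not (start <= days < stop):
            continue  # outside the range being examined
        bucket = start + ((days - start) // width) * width
        counts[bucket][1] += 1
        if p["coverage_state"] == "Submitted and indexed":
            counts[bucket][0] += 1
    return {
        f"{b}-{b + width - 1}": indexed / total
        for b, (indexed, total) in sorted(counts.items())
    }


# Illustrative pages only, not data from the study.
sample_pages = [
    {"days_since_last_crawl": 105, "coverage_state": "Submitted and indexed"},
    {"days_since_last_crawl": 108, "coverage_state": "Crawled - currently not indexed"},
    {"days_since_last_crawl": 135, "coverage_state": "Crawled - currently not indexed"},
    {"days_since_last_crawl": 250, "coverage_state": "Submitted and indexed"},
]
print(indexed_share_by_bucket(sample_pages))
```

Passing `start=101` shifts the edges so that one bucket ends at day 130 and the next begins at day 131, which lines up with the cut-off discussed above.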

Below is the raw data to understand the scale of the pages in each crawl bucket.

🧠 Final Thoughts

The 130-day indexing rule is a strong indicator that your pages may get deindexed.

However, it’s not a hard rule. There will be Not Indexed pages that have been crawled within the last 130 days. Based on the data, 2 rules stand out when it comes to tracking days since last crawl:

🔴 130-day rule: If a page hasn’t been crawled in the last 130 days, then there is a 99% chance the page will not be indexed.

🟢 30-day rule: If the page has been crawled in the last 30 days, then there is a 97% chance the page is indexed.

The longer it takes for Googlebot to crawl your pages (130+ days), the greater the chance the pages will be Not Indexed.
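The two rules can be turned into a simple triage function. The sketch below is only an illustration: the label names and the middle "watch" band for pages between 31 and 130 days are our own assumptions, not categories from the study.

```python
def crawl_risk(days_since_last_crawl: int) -> str:
    """Map days since last crawl to a rough risk label based on the two rules."""
    if days_since_last_crawl > 130:
        return "high risk"  # 130-day rule: very likely Not Indexed
    if days_since_last_crawl <= 30:
        return "likely indexed"  # 30-day rule: very likely indexed
    return "watch"  # hypothetical middle band, not defined by the study


for days in (7, 75, 145):
    print(days, crawl_risk(days))
```

Remember that both rules are probabilities rather than guarantees, so a label like this is a prompt to check a page, not a verdict on its index status.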

This shouldn’t come as a surprise to many experienced SEO professionals.

The idea of crawl optimisation and tracking days since last crawl is not new. Over the last 10 years, many SEO professionals like AJ Kohn have talked about the idea of CrawlRank. And Dawn Anderson has provided deep technical explanations on how Googlebot crawling tiers work.

To summarise their work in a nutshell:

Pages crawled less frequently compared to your competitors receive less SEO traffic. You win if you get your important pages crawled more often by Googlebot than your competition.

The issue has always been tracking days since the last crawl for many websites at scale and identifying the exact length of time when pages are deindexed.

However, at Indexing Insight, we can automatically track the last crawl time for every page we monitor AND calculate the days since last crawl for every page.

What does this mean?

It means very soon we’ll be able to add new reports to Indexing Insight that allow customers to identify, at scale, which important pages are at risk of being deindexed, and to monitor how long it takes Googlebot to crawl the important pages on their website.

📚 Further Reading

Crawl Budget Optimisation: You are what Googlebot Eats by AJ Kohn

Large site owner's guide to managing your crawl budget by Google

Negotiating crawl budget with googlebots by Dawn Anderson

Indexing Insight is a Google indexing intelligence tool for SEO teams who want to identify, prioritise and fix indexing issues at scale.
