How Google Search Console misreports ‘URL is unknown to Google’

Why ‘URL is unknown to Google’ is missing from the page indexing report

Indexing Insight helps you monitor Google indexing for large-scale websites with 100,000 to 1 million pages.

Google Search Console is misreporting ‘URL is unknown to Google’.

The page indexing report in Google Search Console (GSC) misreports important historically crawled and indexed pages with the indexing state ‘URL is unknown to Google’.

It groups these pages under the ‘Discovered - currently not indexed’ report.

In this newsletter, I'll show examples of how GSC misreports pages whose indexing state has changed to ‘URL is unknown to Google’.

Let's dive in.

🌊 The hidden changes beneath the surface

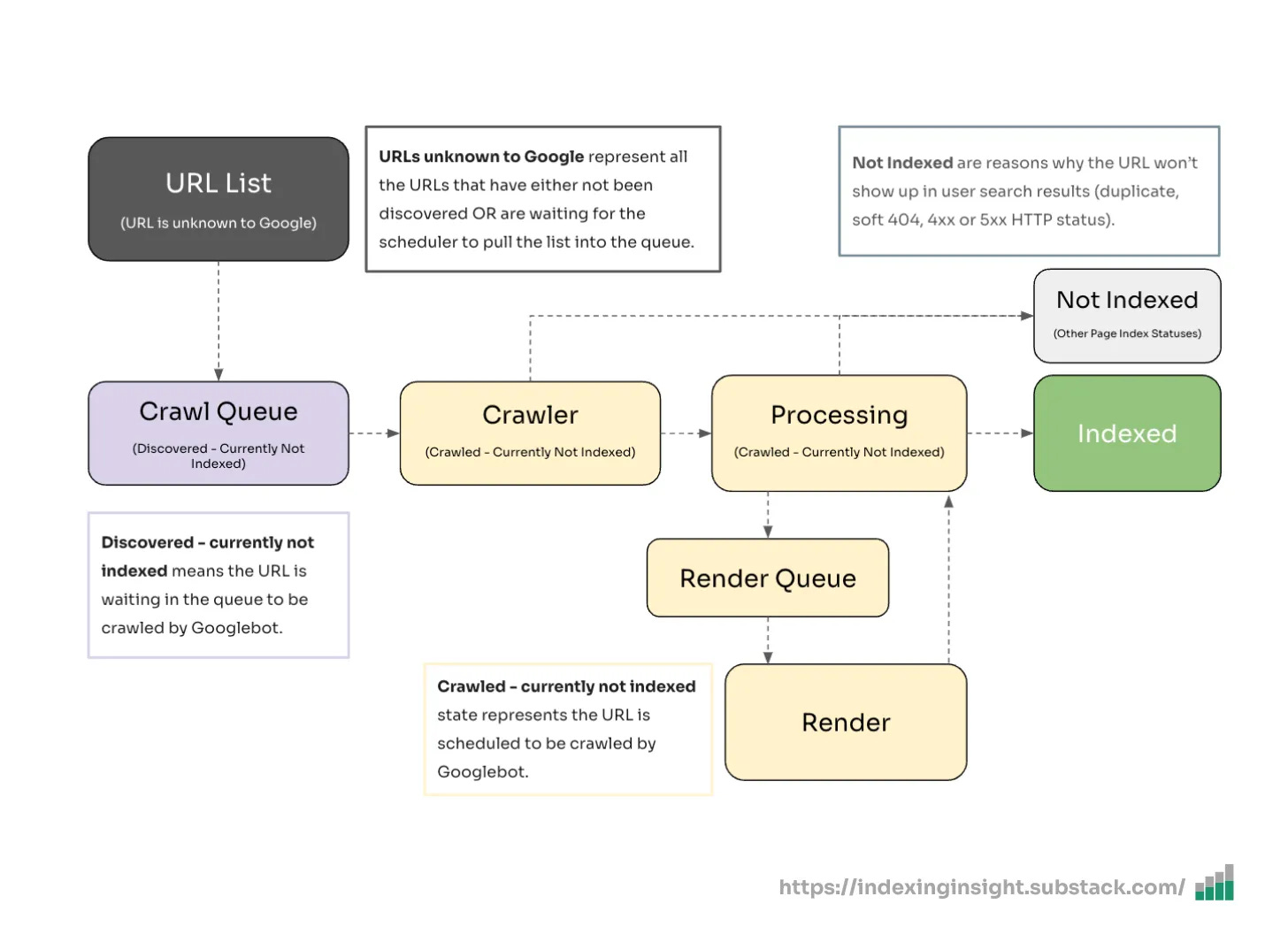

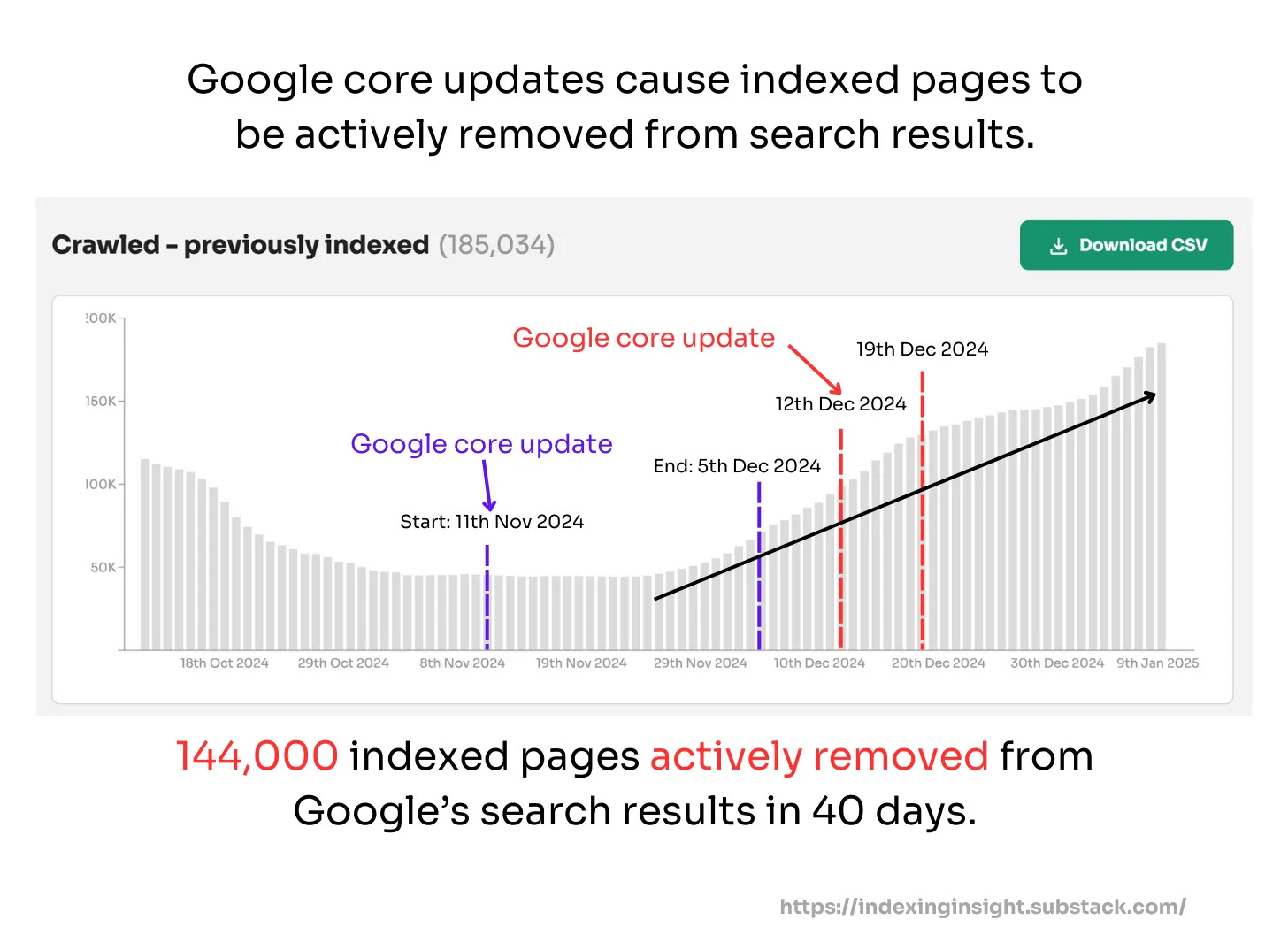

Google core updates impact crawling and indexing.

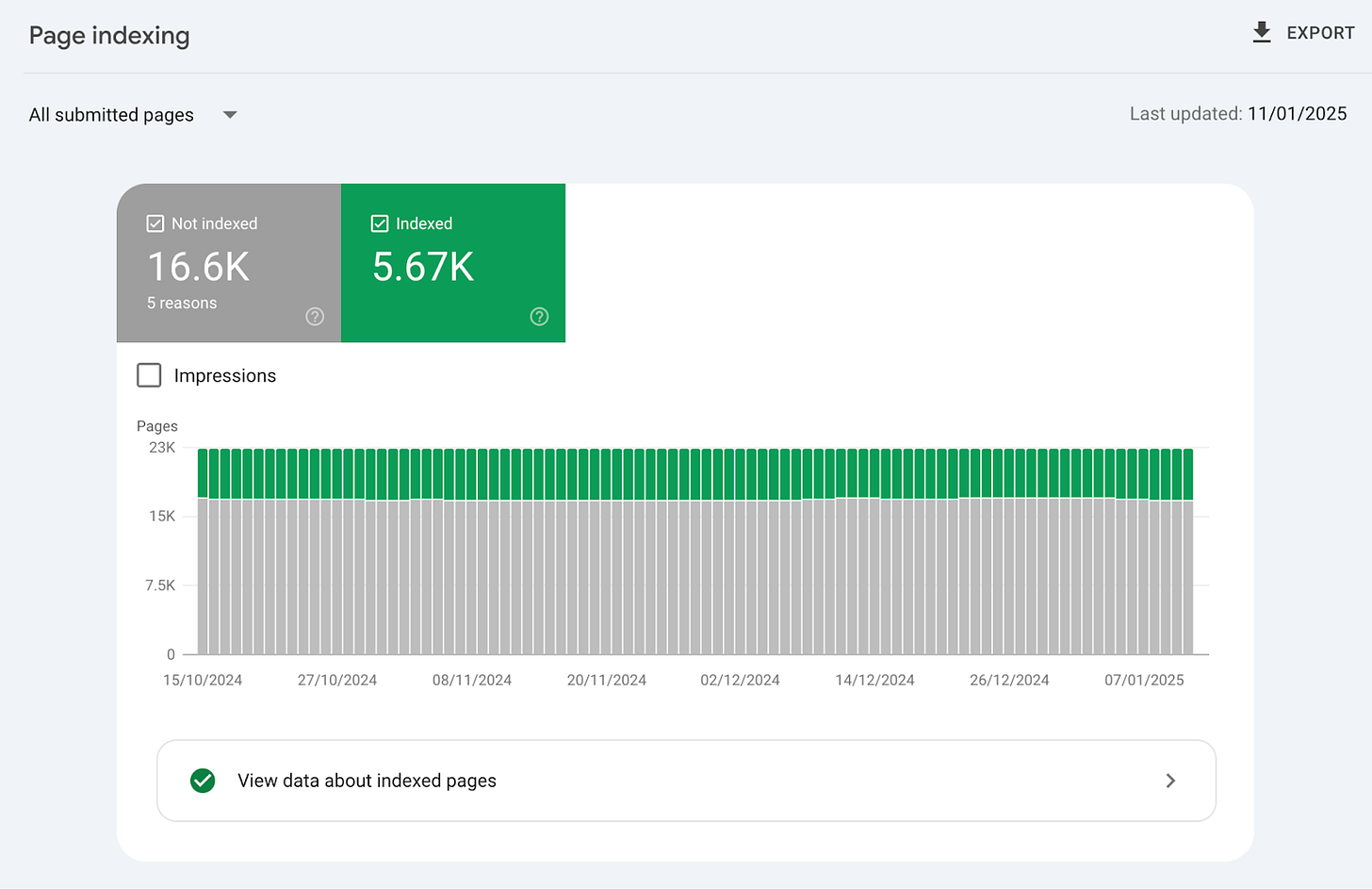

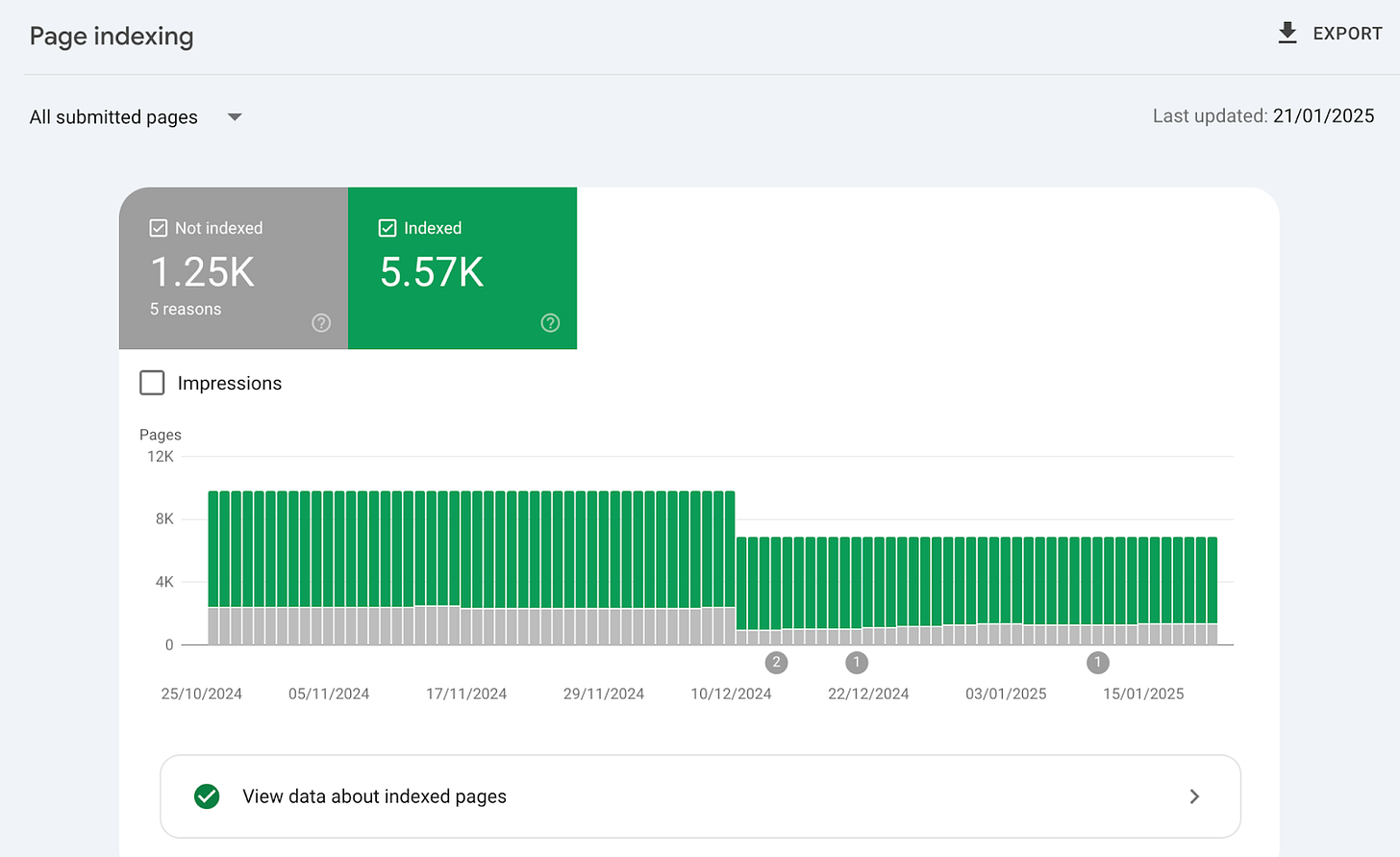

However, you might miss the impact if you only check your website’s indexing data in the Google Search Console > Page Indexing report.

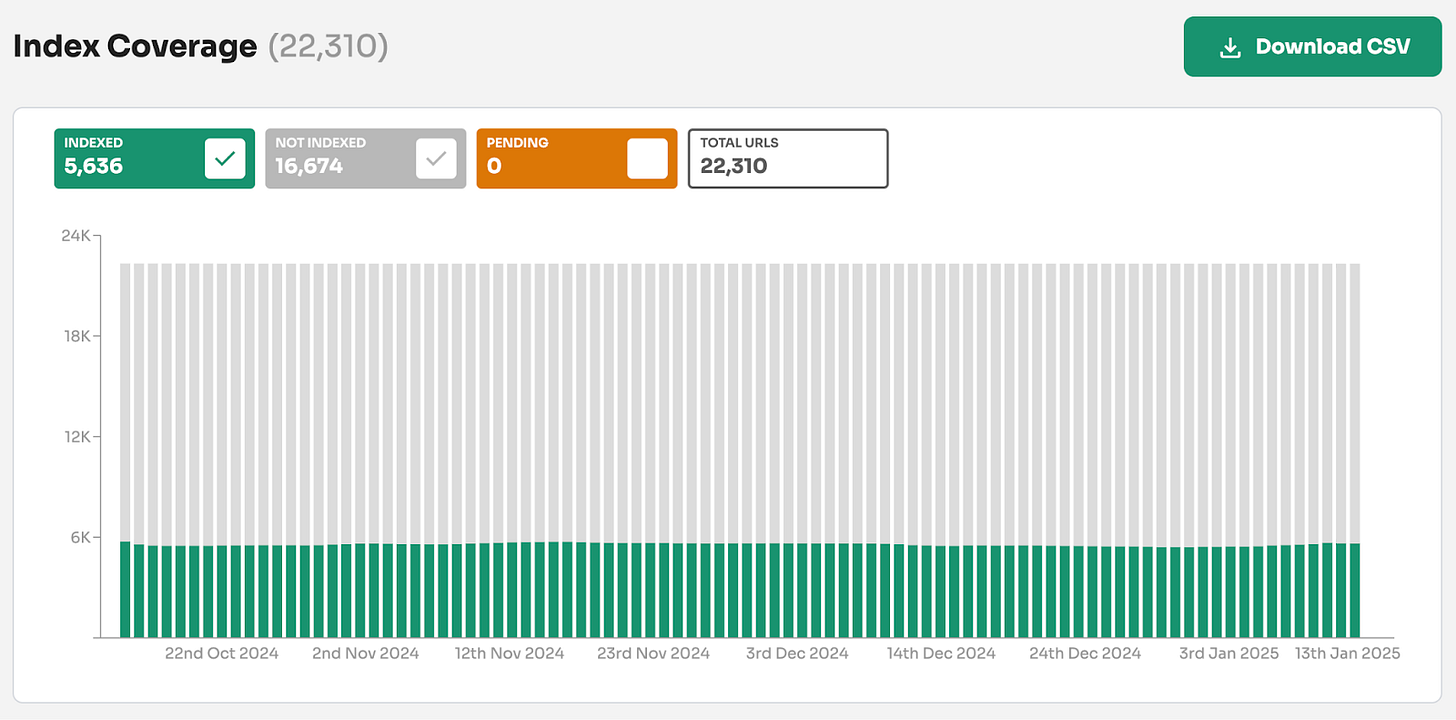

As you can see, nothing has changed on the surface.

But dig one level deeper and you can see the impact of a Google core update on indexing.

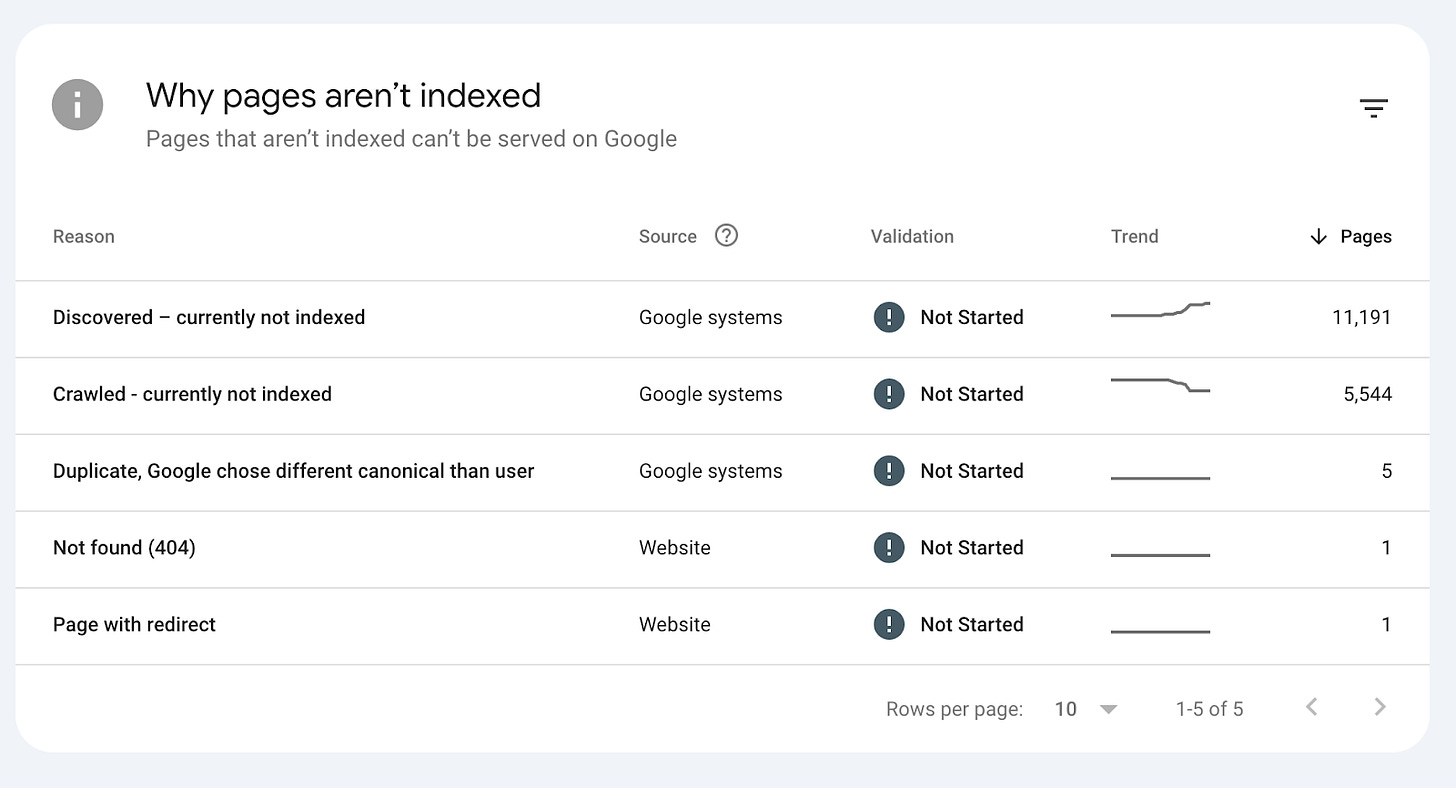

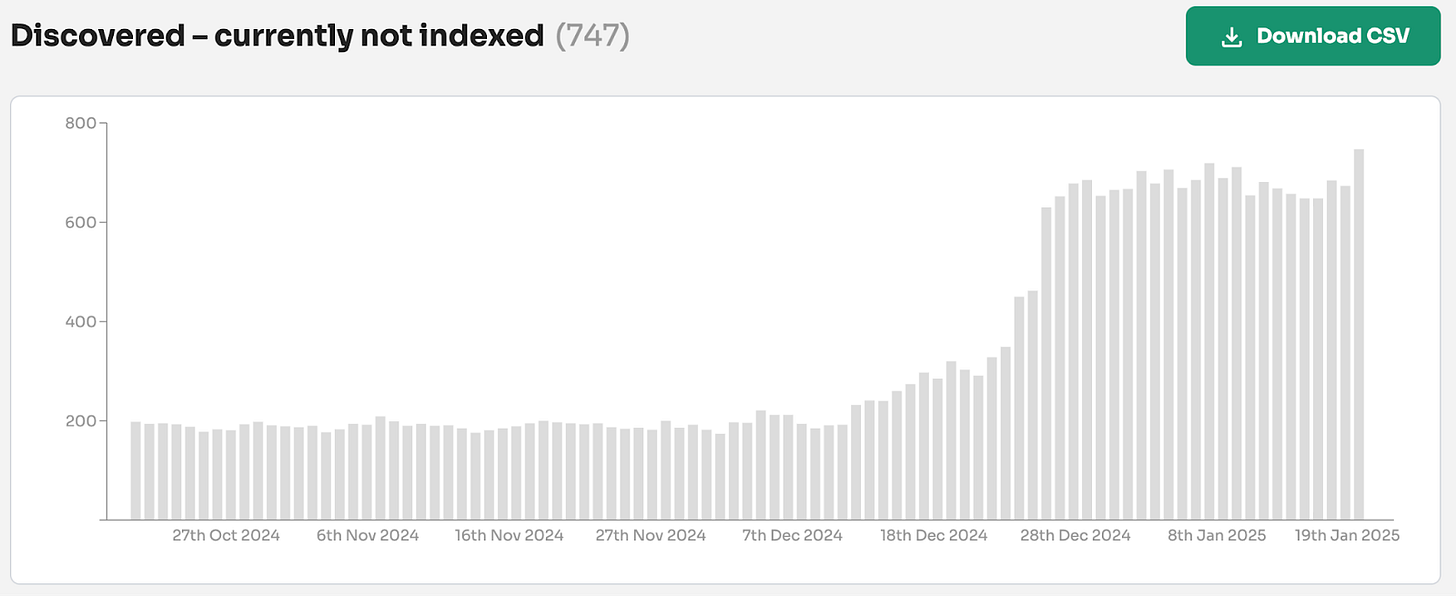

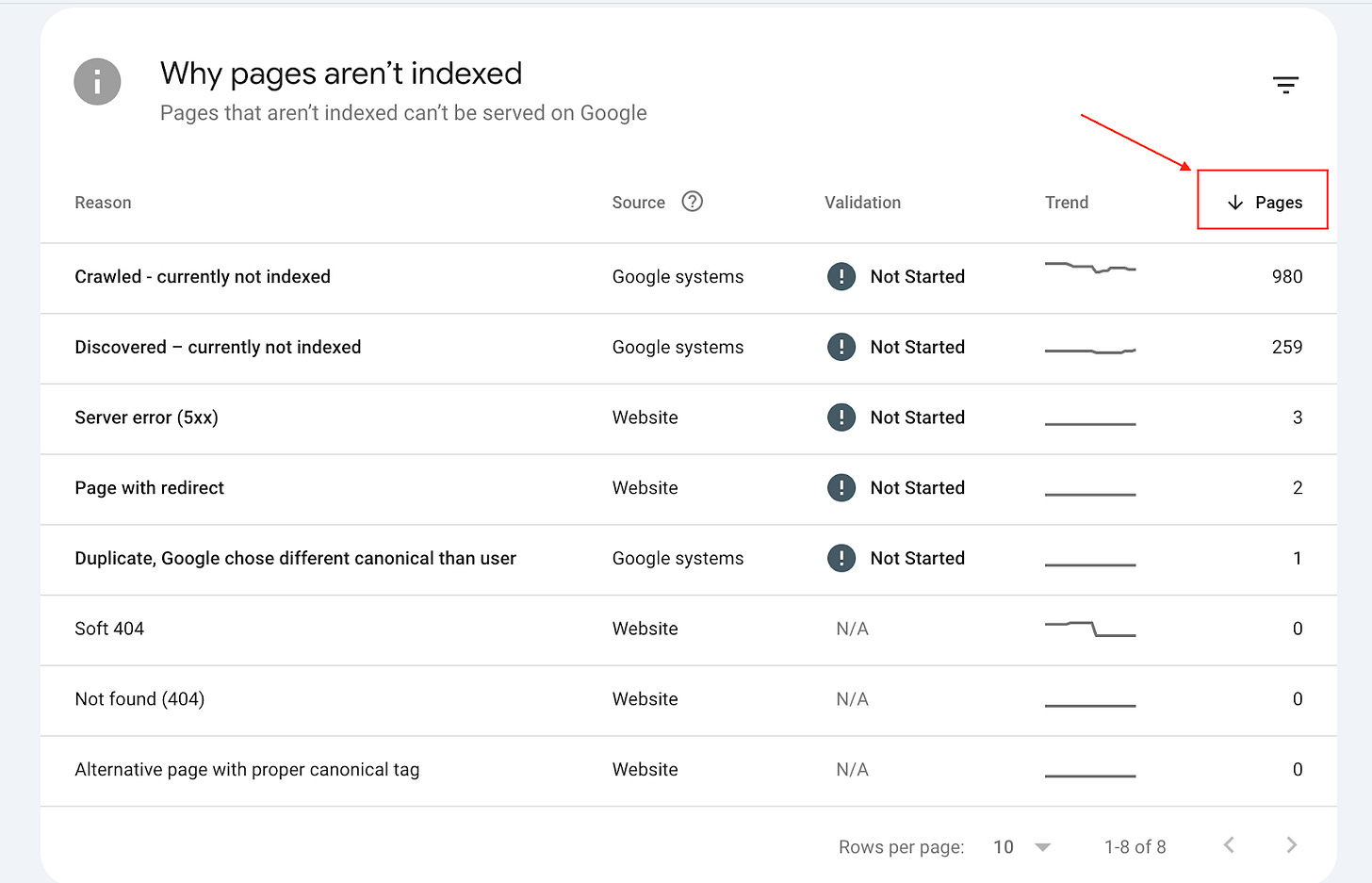

If you visit the ‘Why pages aren’t indexed’ reports, you can see a decline in ‘Crawled - currently not indexed’ and an increase in ‘Discovered - currently not indexed’.

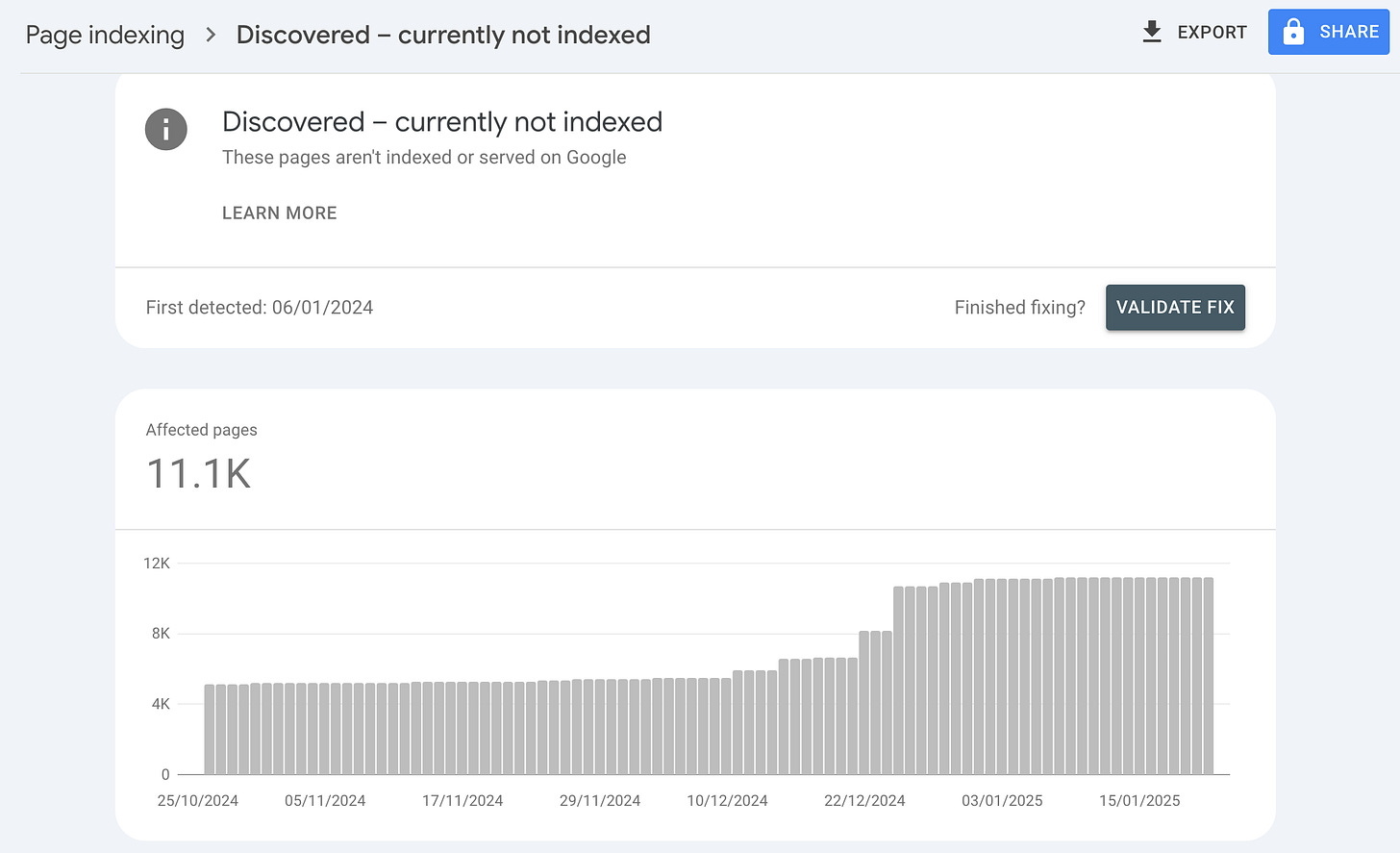

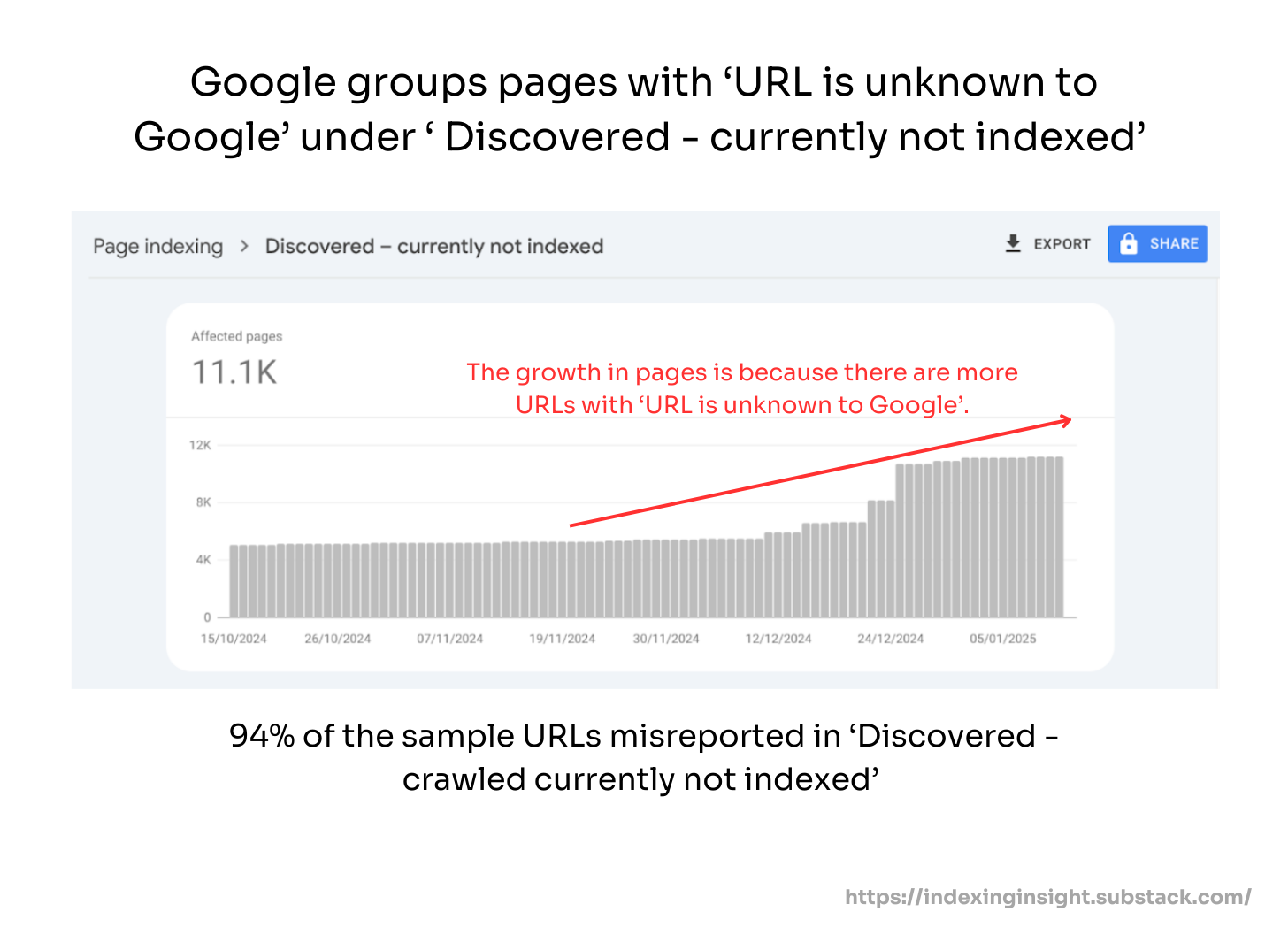

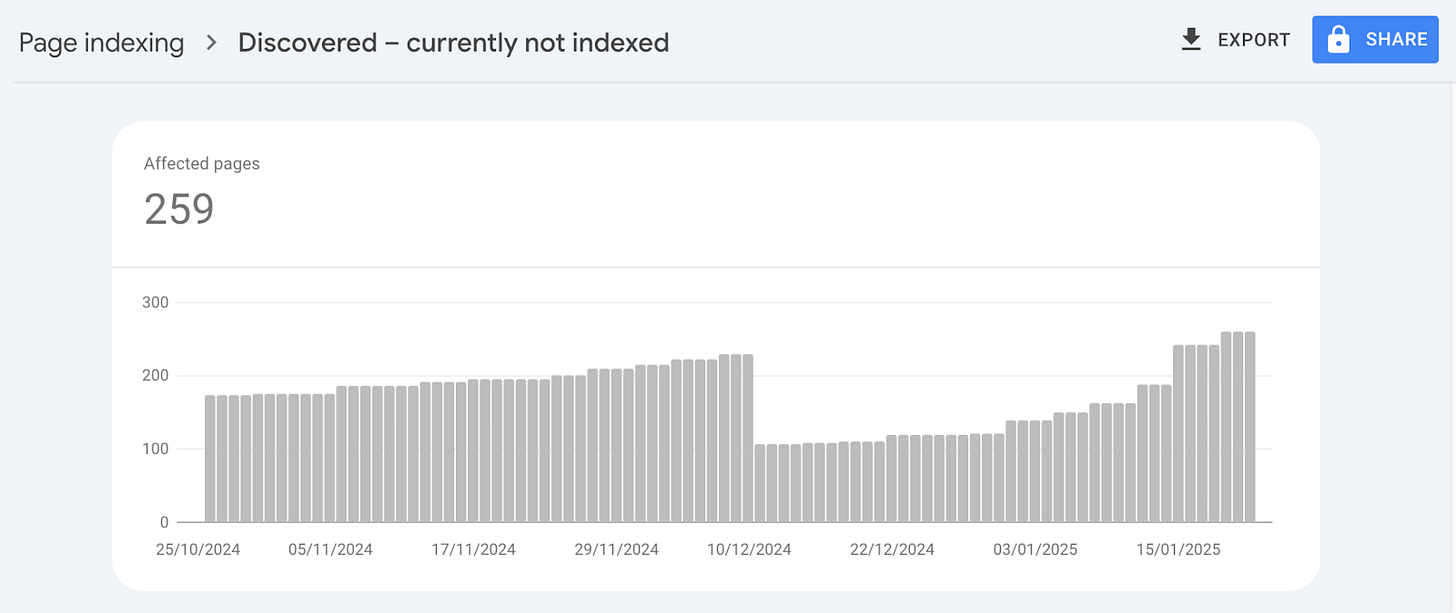

If we look at the ‘Discovered - currently not indexed’ report, we can see that the number of pages has increased after a Google core update.

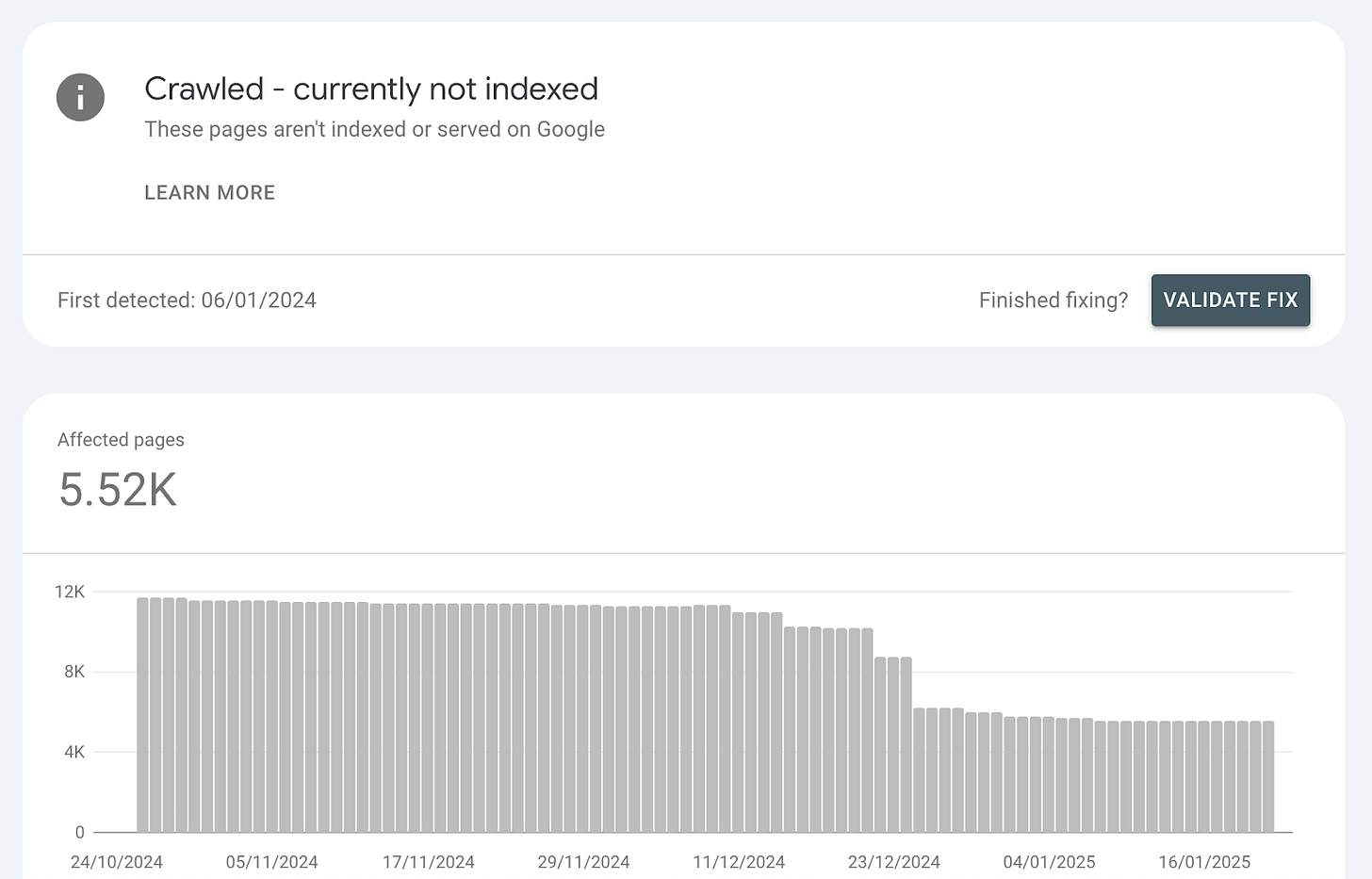

If you check the ‘Crawled - currently not indexed’ report, you will see a decline in the total number of pages in this index state.

This shows that the pages’ index state switched from ‘Crawled - currently not indexed’ to ‘Discovered - currently not indexed’.

This is yet more evidence that index states can reverse or go backwards, and that index states indicate crawl and index priority within Google’s index.
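To make the idea of a state "going backwards" concrete, here is a minimal sketch that compares two snapshots of index states and lists the URLs that dropped down the order. The ranking, the `backward_moves` helper, and the example URLs are all assumptions for illustration. The ranking follows the argument in this newsletter and is not an official Google scale.

```python
# Assumed ordering, highest crawl priority first. This ranking illustrates
# the article's argument; it is not an official Google scale.
PRIORITY = [
    "Submitted and indexed",
    "Crawled - currently not indexed",
    "Discovered - currently not indexed",
    "URL is unknown to Google",
]
RANK = {state: position for position, state in enumerate(PRIORITY)}


def backward_moves(before, after):
    """Return (url, old_state, new_state) for URLs that dropped in priority."""
    moves = []
    for url, old_state in before.items():
        new_state = after.get(url)
        if new_state is None or old_state not in RANK or new_state not in RANK:
            continue  # skip URLs missing from a snapshot or in other states
        if RANK[new_state] > RANK[old_state]:
            moves.append((url, old_state, new_state))
    return moves


# Hypothetical snapshots taken before and after a core update.
before = {
    "https://example.com/a": "Crawled - currently not indexed",
    "https://example.com/b": "Submitted and indexed",
    "https://example.com/c": "Discovered - currently not indexed",
}
after = {
    "https://example.com/a": "Discovered - currently not indexed",
    "https://example.com/b": "Submitted and indexed",
    "https://example.com/c": "URL is unknown to Google",
}

for url, old, new in backward_moves(before, after):
    print(f"{url}: {old} -> {new}")
```

A check like this only works if you keep your own history of index states, because the page indexing report shows totals per state rather than per-URL transitions.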

BUT that is not the most interesting part of this report.

Indexing Insight has uncovered that Google Search Console isn’t being honest about the index states of pages that get hit by the core update.

Indexing Insight reporting spike in ‘URL is unknown to Google’

We get different results if we rerun the same analysis in Indexing Insight.

Just like Google Search Console, if we consider indexing in terms of indexed vs. unindexed, nothing much changes after a Google core update.

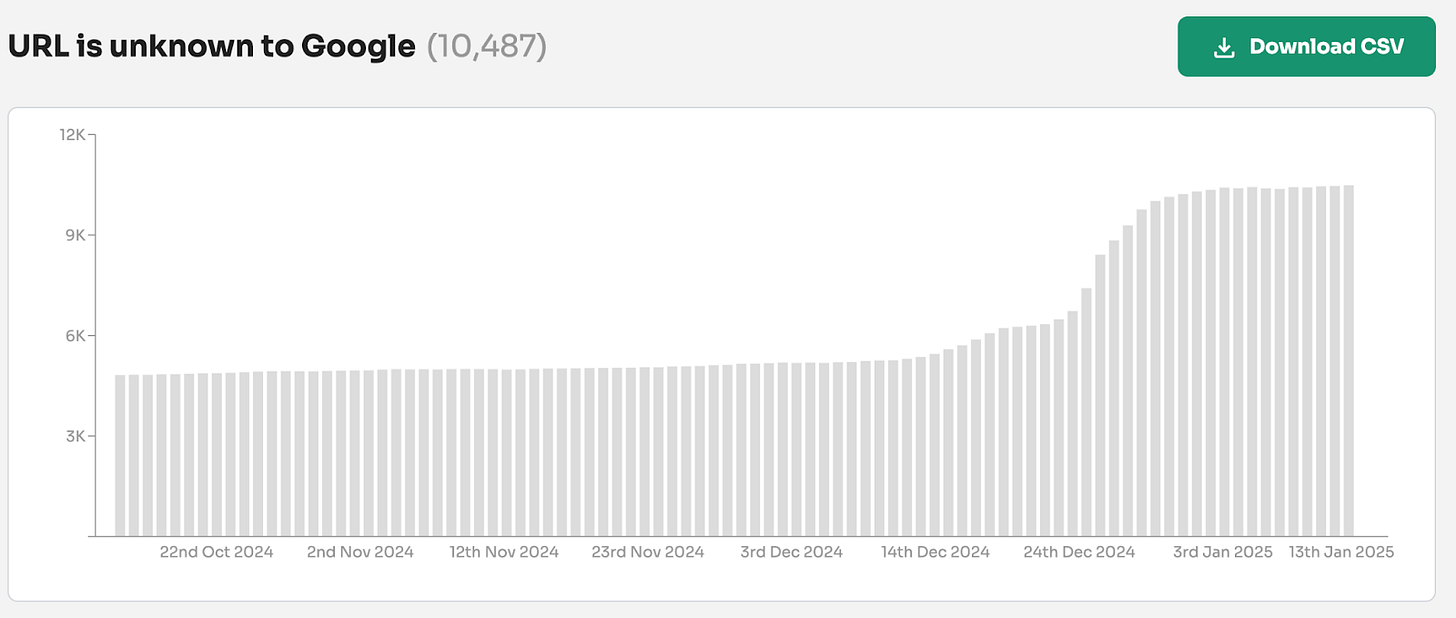

However, we get a very different result when we go to why pages aren’t being indexed.

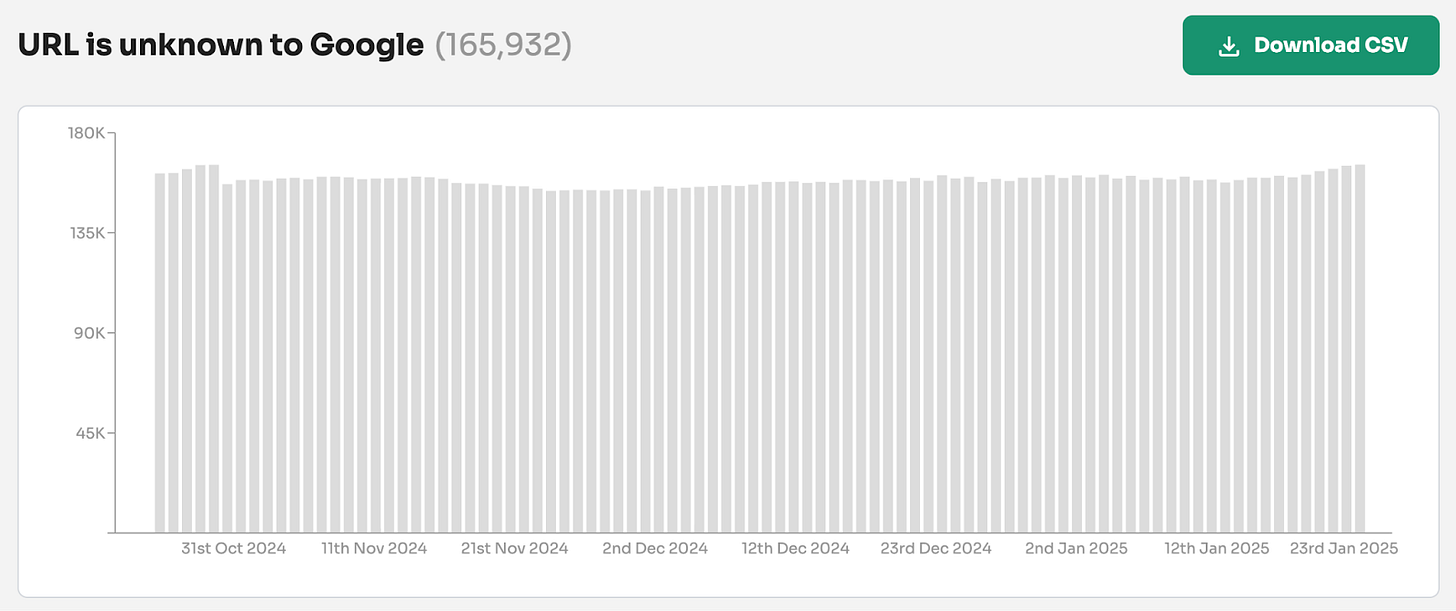

Instead of a sharp increase in ‘Discovered - currently not indexed’, we see a sharp rise in pages with the index state ‘URL is unknown to Google’.

Checking the ‘Discovered - currently not indexed’ report shows an increase in the total number of pages in this report.

But not the 11,000 URLs reported in Google Search Console. Only 747 URLs are in the report.

I was shocked by the difference in reporting. I did some analysis.

The manual GSC indexing report analysis

The analysis was pretty straightforward and designed to answer a basic question:

How many URLs were reported correctly in the 1,000 sample URLs in the report?

First, I performed some manual checks.

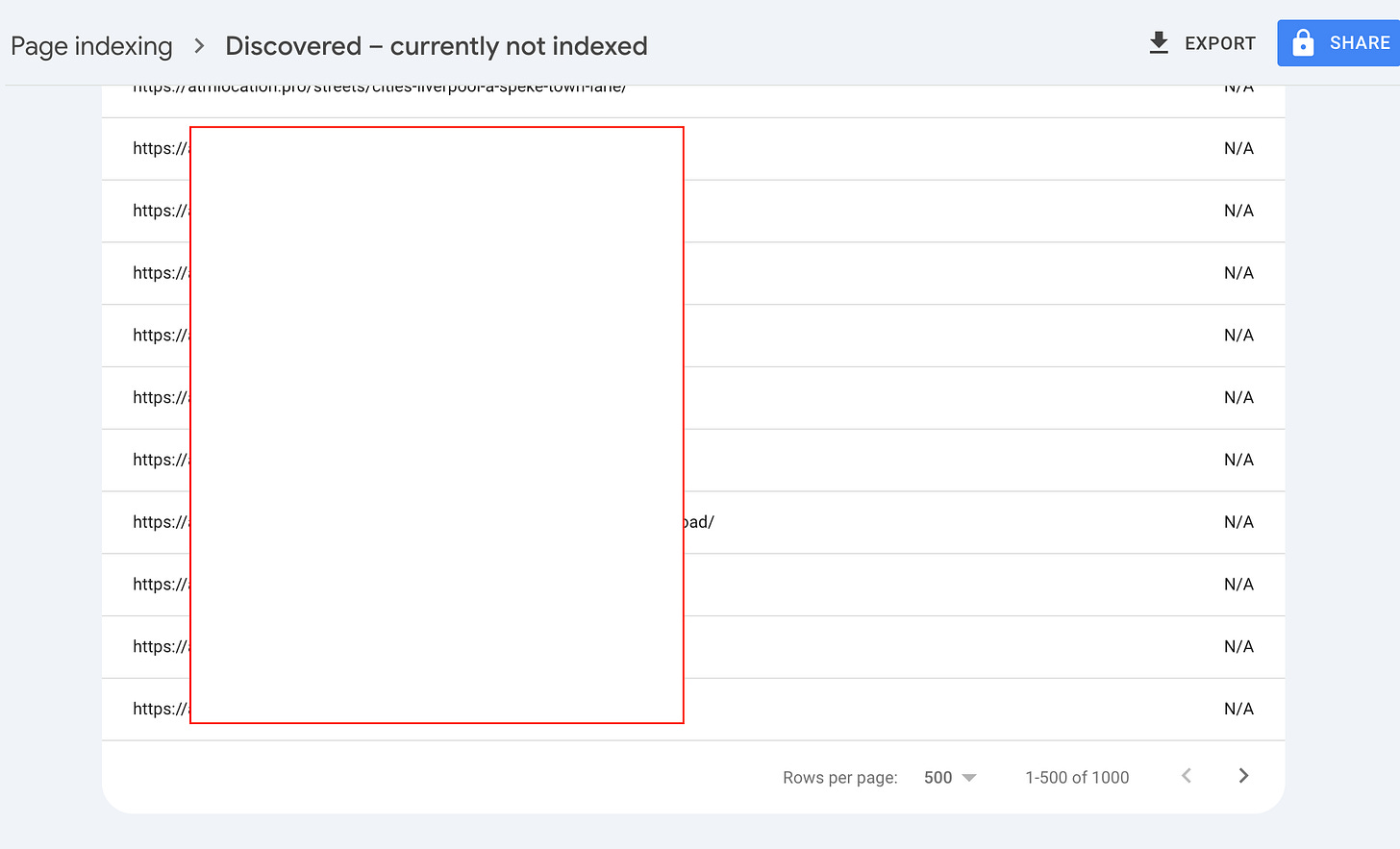

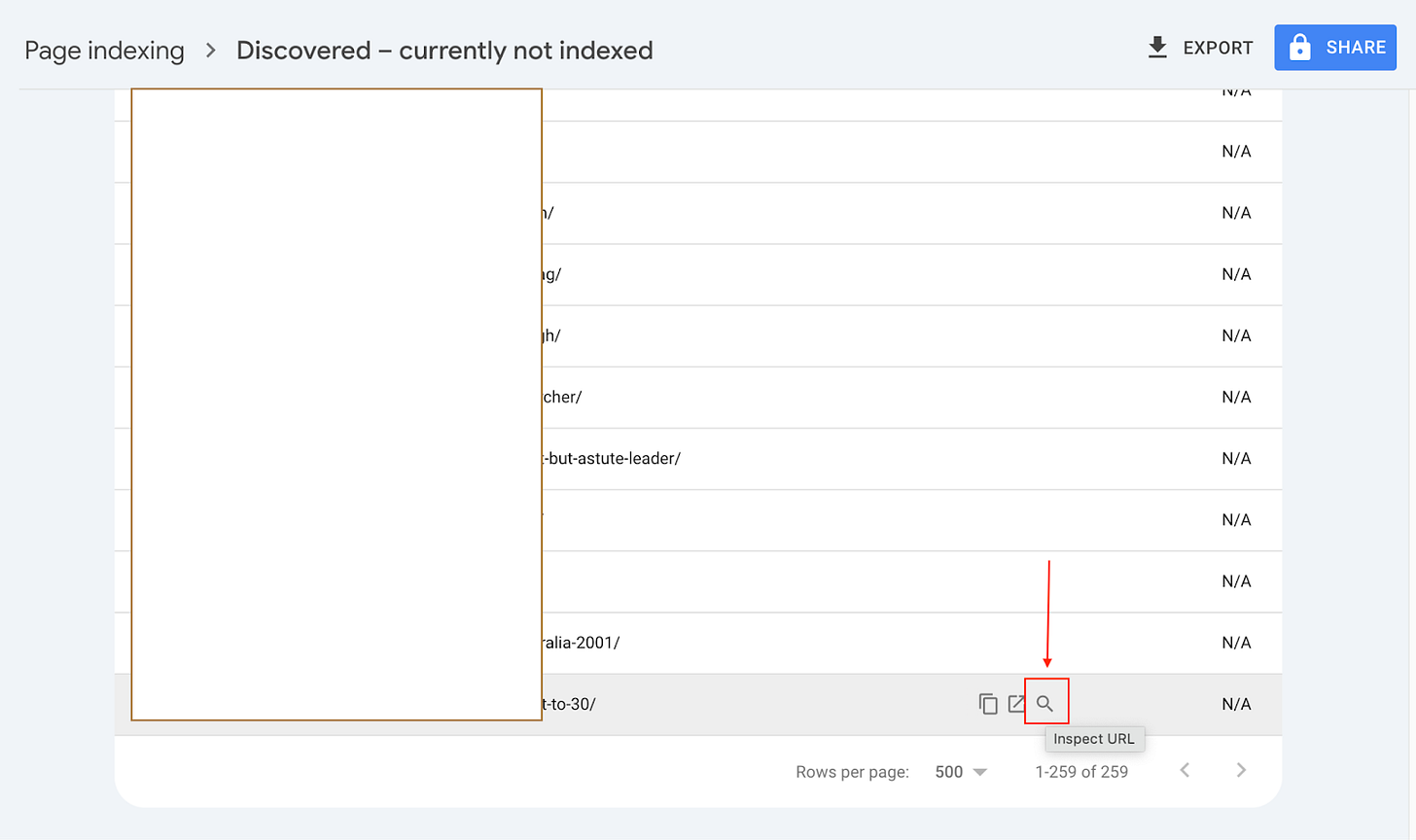

Then, I went to the sample URL list in the ‘Discovered - currently not indexed’ report and changed the number of URLs shown to 500. I then scrolled to the bottom of page 1 of the report and started inspecting URLs using the URL Inspection tool in GSC.

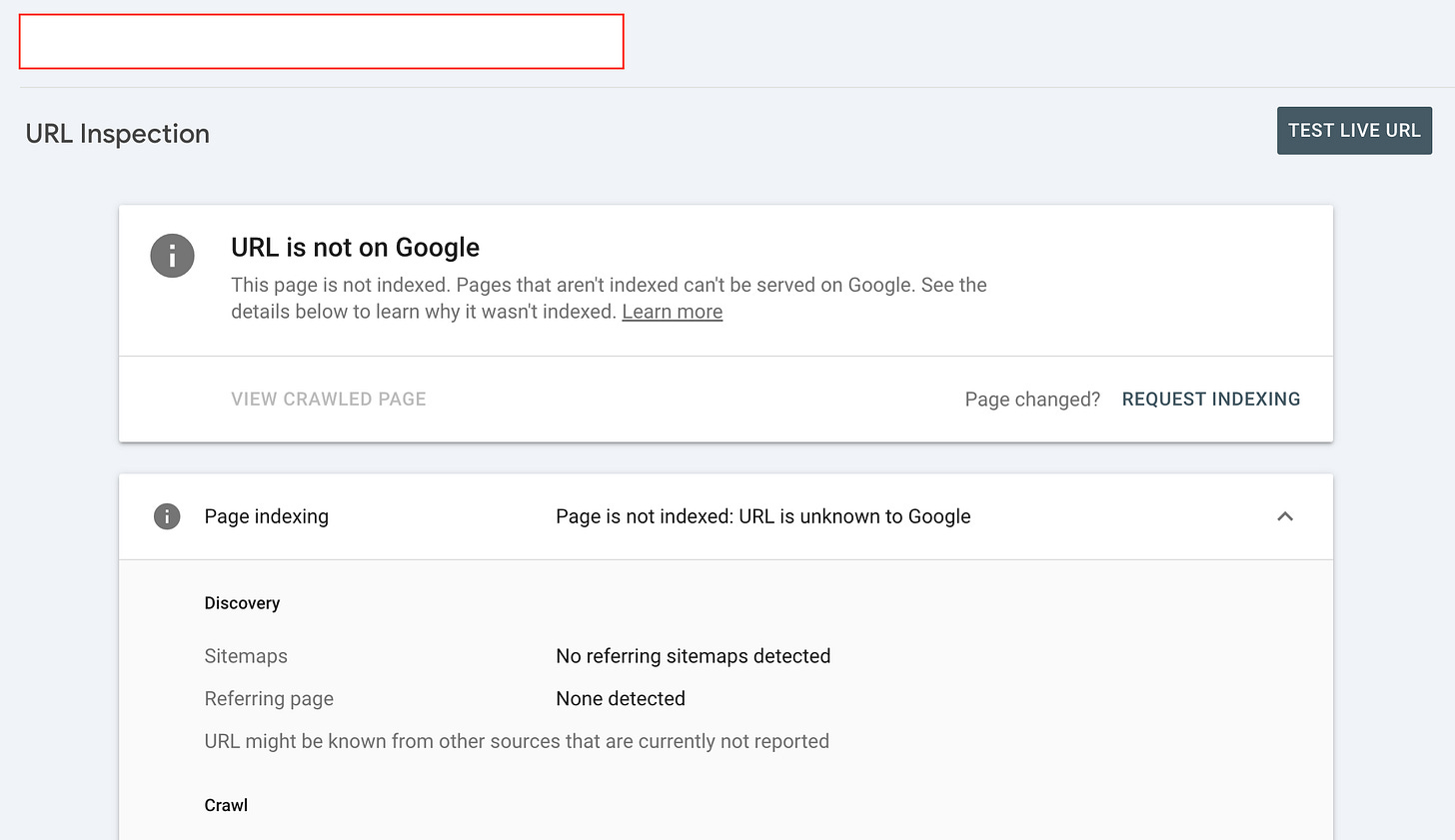

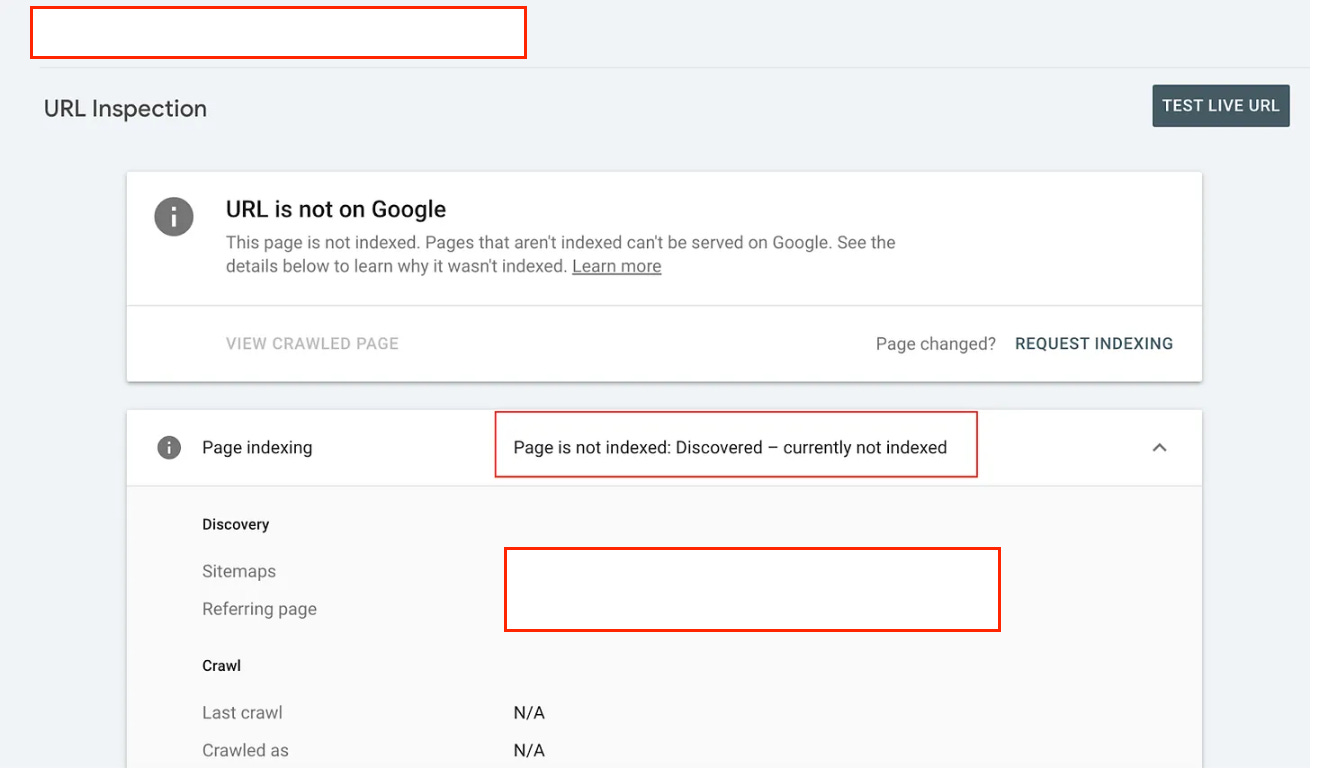

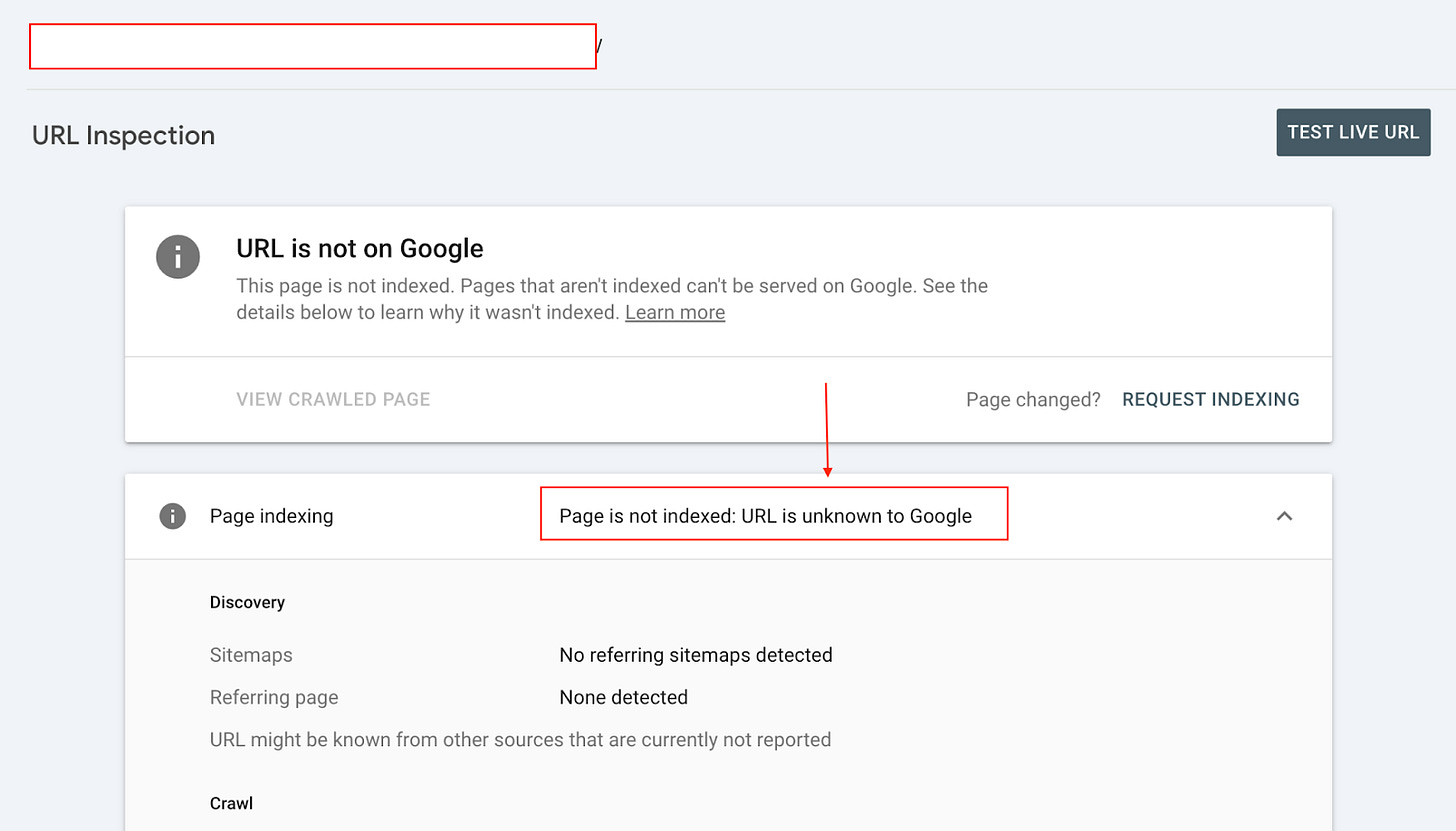

…and noticed that when you check the page with the URL Inspection tool, it shows the index state as ‘URL is unknown to Google’.

I did this 10 times, working from the bottom of the page to the top and inspecting random URLs. They all returned the same result: ‘URL is unknown to Google’.

I then reversed the order in which I inspected URLs, starting at the top of the report (with the 1st result) and working my way down.

The result was different. These pages were reported as ‘Discovered - currently not indexed’.

Something was happening, so I downloaded all the sample URLs and compared them to the indexing state found in Indexing Insight.

Random manual checks showed that there was misreporting in the Google Search Console.

So, I did more investigating.

Google Search Console misreporting data

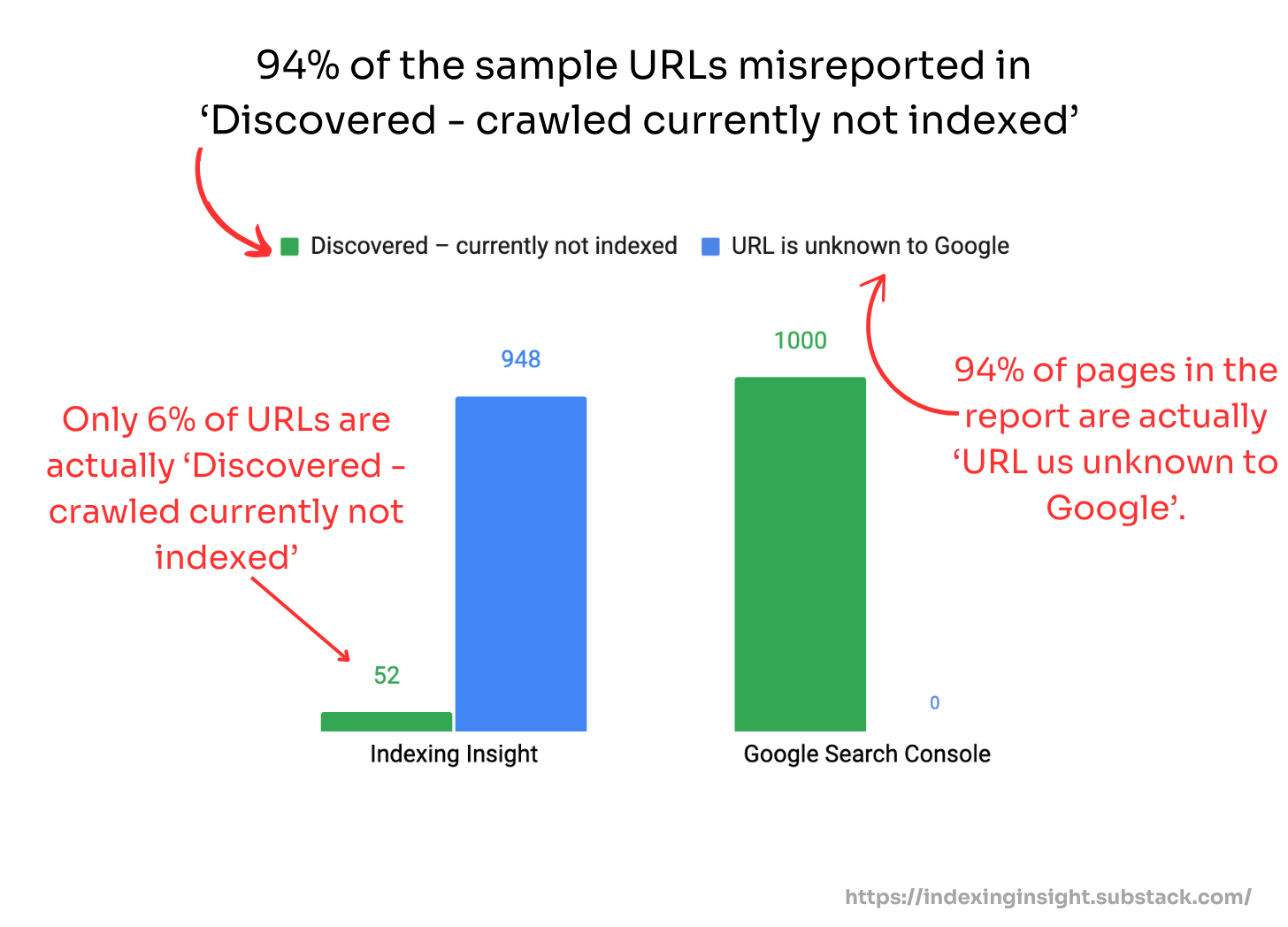

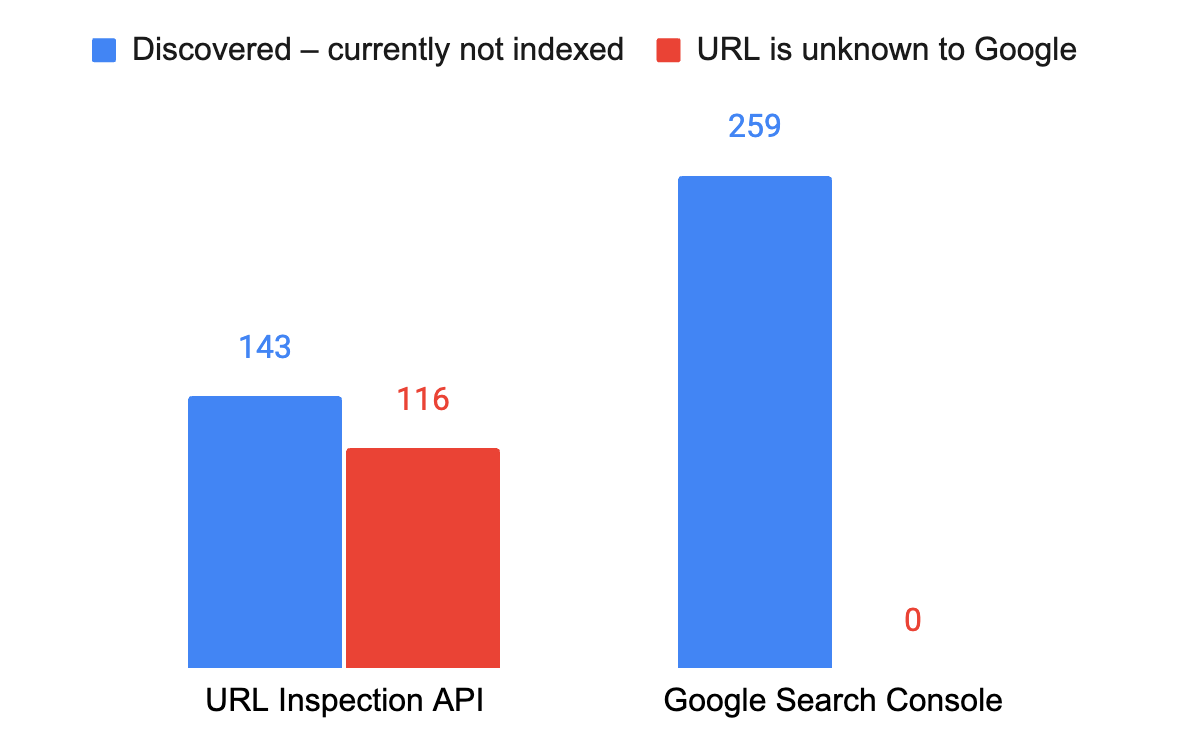

Next, I conducted a simple comparison between the page states reported in GSC vs Indexing Insight.

Indexing Insight uses the URL Inspection API to fetch the index states of pages. And the URL Inspection tool is the most authoritative source of indexing data (according to Google).
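For anyone who wants to pull the same index state themselves, here is a rough sketch of the request and response shape of the URL Inspection API's `index.inspect` method. The field names `inspectionUrl`, `siteUrl` and `coverageState` come from Google's API reference. The helper names and the sample response are my own assumptions, and the response is hand-written rather than real data. As far as I'm aware, in the google-api-python-client library the call itself is usually made with `service.urlInspection().index().inspect(body=...).execute()`, which needs authenticated credentials and is left out here.

```python
def build_inspect_request(page_url, property_url):
    """Request body for the URL Inspection API's index.inspect method."""
    return {"inspectionUrl": page_url, "siteUrl": property_url}


def coverage_state(response):
    """Pull the index state (coverageState) out of an inspect response."""
    result = response.get("inspectionResult", {})
    return result.get("indexStatusResult", {}).get("coverageState")


# Trimmed, hand-written example of a response (not real data).
sample_response = {
    "inspectionResult": {
        "indexStatusResult": {
            "verdict": "NEUTRAL",
            "coverageState": "URL is unknown to Google",
        }
    }
}

print(build_inspect_request("https://example.com/a", "https://example.com/"))
print(coverage_state(sample_response))
```

Returning `None` when the field is missing keeps a bulk run going when a single response comes back incomplete.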

After a Google core update, I found that 94% of page indexing states are being misreported as ‘Discovered - currently not indexed’ instead of ‘URL is unknown to Google’.

The sheer volume of URLs that were being misreported was staggering to me.

Over 90% of the pages in the ‘Discovered - currently not indexed’ report are being misreported.
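A quick way to get a figure like this is to tally the state the URL Inspection API returns for every URL sampled from one GSC report. The sketch below is illustrative only: `state_breakdown` is a hypothetical helper and the 50 sample states are made up, not the data from this analysis.

```python
from collections import Counter


def state_breakdown(api_states):
    """Return [(state, count, percent)] sorted by count, largest first."""
    counts = Counter(api_states)
    total = sum(counts.values())
    return [
        (state, count, round(100 * count / total, 1))
        for state, count in counts.most_common()
    ]


# Made-up sample: what the URL Inspection API returned for 50 URLs that GSC
# listed under 'Discovered - currently not indexed'.
api_states = (
    ["URL is unknown to Google"] * 47
    + ["Discovered - currently not indexed"] * 3
)

for state, count, percent in state_breakdown(api_states):
    print(f"{percent:>5}%  {count:>3}  {state}")
```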

The question I asked myself was: why?

⁉️ Why is GSC misreporting the data?

This misreporting is by design.

You might argue that it’s because of a delay in the page indexing report. But the number of affected pages in the ‘Discovered - currently not indexed’ report graph is increasing week by week over 30-40 days.

This indicates the misreporting is by design.

In the GSC backend, there appears to be a rule that stops the ‘URL is unknown to Google’ report from being shown if those pages were previously crawled and indexed.

This is relatively simple for Google engineers to execute because we do something similar with ‘Crawled - previously indexed’ in Indexing Insight. And we only have 1 developer.

Changing the reporting rules should be easy peasy for Google.

Instead, Google Search Console groups pages with the index states ‘URL is unknown to Google’ and ‘Discovered - currently not indexed’ together and shows sample URLs of both states in the report.

Why would their team do this?

I have a couple of theories, but the main one is that Google wants people to avoid thinking too hard about crawling and indexing. Remember, Google Search Console is designed for the average user. It’s not meant to be a deep technical analysis tool.

So, they will prioritise UX over technical reporting.

This is why they group historic pages with the index state ‘URL is unknown to Google’ under the ‘Discovered - currently not indexed’ report. Fewer reports mean fewer headaches (and questions).

Or it’s a bug. And the Google Search Console team will eventually fix it after it deals with other priorities.

Who really knows (outside Google)?

🤷 Why does this misreporting matter?

The change in index state indicates that Google is actively lowering the crawl priority of your pages, especially when Google core updates are rolled out.

But because there is no ‘URL is unknown to Google’ report, you are unaware of the change.

In a previous newsletter, How Index States Indicate Crawl Priority, I showed how the page indexing states could be reversed and how this can be mapped to crawl priority within Googlebot.

And ‘URL is unknown to Google’ is the lowest priority.

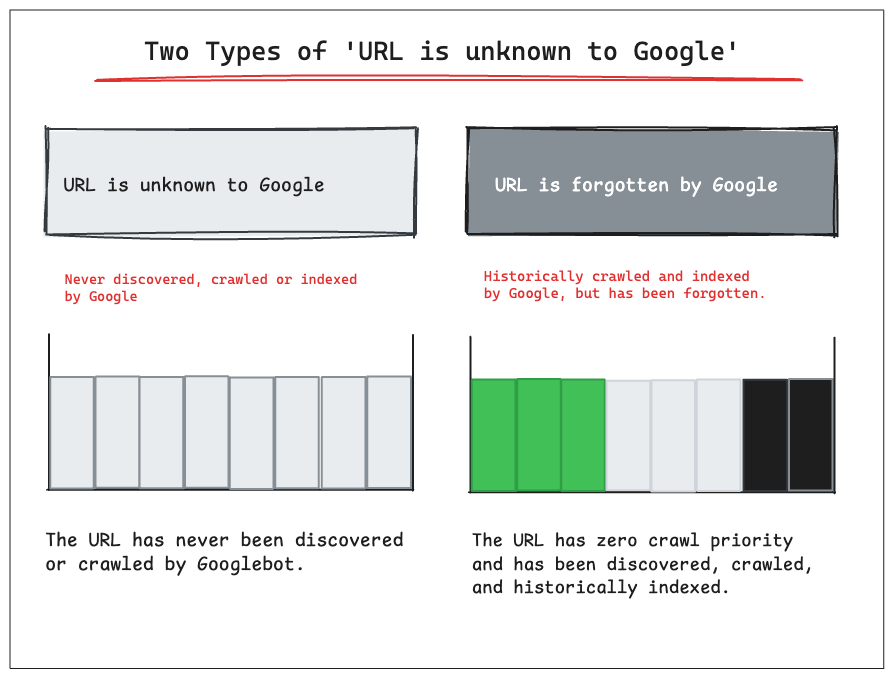

In another newsletter, What is ‘URL is unknown to Google’? I proved that pages within the ‘URL is unknown to Google’ index state have been previously crawled and indexed.

Update 27/01/2025: I updated the article based on Gary Illyes’ comments. Google can “forget” URLs as it purges low-value pages from its index over time.

To Googlebot, it is as if it has not seen that URL before, because of the signals gathered over time (or the lack of signals). So to Googlebot, a historic URL can be “unknown” because it was so forgettable.

This means two types of ‘URL is unknown to Google’ exist:

URL is unknown to Google - The URL has never been discovered or crawled by Googlebot.

URL is forgotten by Google - The URL was previously crawled and indexed by Google but has been forgotten.
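The two types above can only be told apart if you have kept a record of each URL's past index states. Here is a minimal sketch of that rule. The `classify_unknown` helper and the set of "crawled" states are assumptions for illustration, not a description of how Google or Indexing Insight implements it.

```python
UNKNOWN = "URL is unknown to Google"

# States that show Googlebot has fetched the page at least once.
# Assumption: extend this set with whatever crawled states you track.
CRAWLED_STATES = {"Submitted and indexed", "Crawled - currently not indexed"}


def classify_unknown(current_state, history):
    """Label an 'unknown' URL as never seen or forgotten, using past states."""
    if current_state != UNKNOWN:
        return "not unknown"
    if any(state in CRAWLED_STATES for state in history):
        return "forgotten by Google"
    return "unknown to Google"


# Hypothetical histories for two URLs that are both 'unknown' today.
print(classify_unknown(UNKNOWN, ["Submitted and indexed"]))
print(classify_unknown(UNKNOWN, []))
```

Without the stored history, both URLs look identical in the URL Inspection tool, which is why a single snapshot cannot separate them.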

Finally, in another newsletter, I explained how Google core updates impact indexing.

Google core updates don’t just impact your rankings. They can also cause your pages to be removed from the index or lower the crawl priority of your not indexed pages (changing the index state).

Why am I hammering home these three points?

Because each of these newsletters helps us build a picture of how the misreporting stacks:

🚨Previously indexed pages - Pages with the index state ‘URL is Unknown to Google’ that have been historically crawled and indexed by Google are forgotten.

🚨Google core updates impact indexing states - The Google core updates can cause your indexed pages to turn into not indexed pages. And your not indexed pages to change indexing state (and crawl priority).

🚨Grouped under ‘Discovered - currently not indexed’ - Previously crawled and indexed pages with the ‘URL is unknown to Google’ are grouped under the ‘Discovered - currently not indexed’ report.

All of this misreporting means that when you analyse your website in GSC, you might be led to believe your pages have never been indexed, and that your website has a discovery and crawling problem.

This assumption is easy to make. But wrong.

This is why it is important to understand the difference between the different indexing states and be able to see historic indexing trends.

Your important submitted pages are likely being actively removed or deprioritised by Google’s index.

🤓 What does this mean for you (as an SEO)?

As an SEO, you need to be able to spot misreporting in the page indexing report.

The good news is that it’s relatively easy to spot misreporting in Google Search Console for important pages. However, the data you can download from the tool is limited.

Let me show you an example of finding ‘URL is unknown to Google’ pages in GSC.

Step 1: Filter on the submitted pages in the page indexing report

Go to the page indexing report in Google Search Console and filter on the submitted pages using XML sitemaps.

Step 2: Sort on pages in the ‘Why pages aren’t indexed’ section of the page indexing report

Scroll down to the bottom of the report and sort the pages by the largest number of URLs.

You should see the trend of total pages within each report, and whether the total number of pages is increasing or decreasing.

Step 3: Go to the ‘Discovered - currently not indexed’ report and change rows per page to 500

Visit the ‘Discovered - currently not indexed’ report and scroll to the table of URLs. Then change the rows per page from 10 to 500.

Step 4: Inspect the bottom pages in the list of URLs in the report

Then start inspecting the URLs at the bottom of the table.

When inspecting the pages using the URL inspection tool, you want to look for the indexing state ‘URL is unknown to Google’.

If you see a lot of pages with ‘URL is unknown to Google’, move on to the final step.

Step 5: Compare the data in the Search Console and URL Inspection API

Finally, you need to download the sample list of URLs and compare them against the index states returned by the URL Inspection API.

This is relatively easy to do using a tool like Screaming Frog. All you need to do is grab the sample list of URLs from the ‘Discovered - currently not indexed’ report and enable the URL Inspection API in the tool.

Then, crawl the URLs using the list mode in the tool.

Once the crawl is complete, you must compare the indexing states from the URL Inspection API with the page indexing report. You’re looking for the % difference between the indexing states.

For example, 44% of the sample pages in the ‘Discovered - currently not indexed’ report used in this example are actually ‘URL is unknown to Google’.
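If you prefer to script the comparison, here is a minimal sketch that joins the GSC sample export with a crawl export and works out the share of URLs in a given state. The column names (`URL`, `Address`, `Coverage`), the helper names, and the inline data are assumptions, so check them against the headers in your own exports.

```python
import csv
import io


def load_states(csv_file, url_column, state_column):
    """Map each URL in a CSV export to its index state."""
    reader = csv.DictReader(csv_file)
    return {row[url_column].strip(): row[state_column].strip() for row in reader}


def share_in_state(sample_urls, api_states, target_state):
    """Percent of sample URLs whose API state equals target_state."""
    checked = [url for url in sample_urls if url in api_states]
    if not checked:
        return 0.0
    hits = sum(1 for url in checked if api_states[url] == target_state)
    return round(100 * hits / len(checked), 1)


# Stand-ins for the two exports. Real files would be opened with
# open(path, newline="") instead of io.StringIO.
gsc_export = io.StringIO(
    "URL\n"
    "https://example.com/a\n"
    "https://example.com/b\n"
    "https://example.com/c\n"
    "https://example.com/d\n"
)
crawl_export = io.StringIO(
    "Address,Coverage\n"
    "https://example.com/a,URL is unknown to Google\n"
    "https://example.com/b,Discovered - currently not indexed\n"
    "https://example.com/c,URL is unknown to Google\n"
    "https://example.com/d,Discovered - currently not indexed\n"
)

sample_urls = [row["URL"].strip() for row in csv.DictReader(gsc_export)]
api_states = load_states(crawl_export, "Address", "Coverage")
print(share_in_state(sample_urls, api_states, "URL is unknown to Google"))
```

URLs that appear in the GSC sample but not in the crawl export are left out of the percentage, so a partial crawl does not drag the figure down.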

The problem is that if you want to find pages at scale, Google only allows you to download 1,000 URLs from any report. And you need to do this analysis manually.

But not with Indexing Insight.

Indexing Insight allows you to find pages that Google has actively deprioritised and moved into the ‘URL is unknown to Google’ report by monitoring Google indexing at scale. And you can download all of this data.

So, you can focus on actioning the data rather than having to stitch it together.

📌 Summary

‘URL is unknown to Google’ is being misreported in Google Search Console.

In this newsletter, we’ve discussed how Google Search Console groups pages with the ‘URL is unknown to Google’ index state under the ‘Discovered - currently not indexed’ report.

This happens even when Google has previously crawled and indexed the pages.

Finally, we’ve reviewed a simple technique for identifying ‘URL is unknown to Google’ pages within the ‘Discovered - currently not indexed’ report.

📊 What is Indexing Insight?

Indexing Insight is a B2B SaaS tool designed to help you monitor Google indexing at scale. It’s for websites with 100K to 1 million pages.

A lot of the insights in this newsletter are based on building this tool.

Subscribe to learn more about Google indexing and future tool announcements.